Sunday, December 28, 2008

Major Oolong Update

http://www.oolongengine.com

I updated the memory manager and the math library, upgraded to the latest POWERVR POD format and added VBO support to each example. Please also note that previous updates added a new memory manager and the VFP math library, along with a bunch of smaller changes.

Next on my list: looking into the sound manager (it seems the current version allocates memory during the frame) and adding the DOOM III level format as a game format. Obviously zip support would be nice as well ... let's see how far I get.

Thursday, December 25, 2008

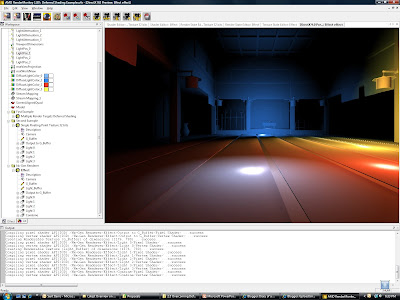

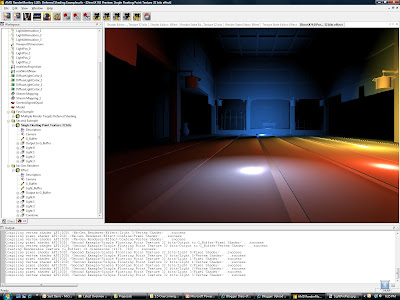

Programming Vertex, Geometry and Pixel Shaders

http://wiki.gamedev.net/index.php/D3DBook:Book_Cover

If you have any suggestions, comments or additions to this book, please let me know or write them on the book's comment pages.

Wednesday, December 24, 2008

Good Middleware

Tuesday, December 23, 2008

Quake III Arena for the iPhone

http://code.google.com/p/quake3-iphone/

There is a list of open issues. If you have more spare time than I do, maybe you can help out.

iP* programming tip #8

The problem is that each on-screen event is defined by the region of the screen in which it happens. When the user slides a finger, it can leave this region. In other words, if you treat a touch in the region as "on" and lifting the finger as "off", then a finger that is moved out of the region and lifted there never switches the event off.

The workaround: while the finger is moving, its previous location is used to find the event region it belongs to, and then the current location is checked against that region. If the current location is no longer inside the event region, the event switches off.

Touch-screen support for a typical shooter might work like this:

In touchesBegan, touchesMoved and touchesEnded there is a loop like this:

    // Enumerate all touch objects
    for (UITouch *touch in touches)
    {
        [self _handleTouch:touch];
        touchCount++;
    }

_handleTouch might look like this:

    - (void)_handleTouch:(UITouch *)touch
    {
        CGPoint location = [touch locationInView:self];
        CGPoint previousLocation;

        // if we are in a touchesMoved phase use the previous location,
        // but then check whether the current location is still in there
        if (touch.phase == UITouchPhaseMoved)
            previousLocation = [touch previousLocationInView:self];
        else
            previousLocation = location;

        ...

        // fire event
        // lower right corner .. box is 40 x 40
        if (EVENTREGIONFIRE(previousLocation))
        {
            if (touch.phase == UITouchPhaseBegan)
            {
                // only trigger once: fire bit is not set yet
                if (!(_bitMask & Q3Event_Fire))
                {
                    [self _queueEventWithType:Q3Event_Fire value1:K_MOUSE1 value2:1];
                    _bitMask |= Q3Event_Fire;
                }
            }
            else if (touch.phase == UITouchPhaseEnded)
            {
                if (_bitMask & Q3Event_Fire)
                {
                    [self _queueEventWithType:Q3Event_Fire value1:K_MOUSE1 value2:0];
                    _bitMask &= ~Q3Event_Fire;
                }
            }
            else if (touch.phase == UITouchPhaseMoved)
            {
                // finger slid out of the region -> switch off
                if (!EVENTREGIONFIRE(location))
                {
                    if (_bitMask & Q3Event_Fire)
                    {
                        [self _queueEventWithType:Q3Event_Fire value1:K_MOUSE1 value2:0];
                        _bitMask &= ~Q3Event_Fire;
                    }
                }
            }
        }

        ...
    }

Tracking whether the switch is on or off can be done with a bit mask. The event is sent off to the game with a separate _queueEventWithType method.
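The same on/off logic can be tried out away from UIKit. This is a minimal C sketch, assuming a 320x480 coordinate system with the fire box in the lower-right 40 x 40 corner; `TouchPhase`, `Point2`, `FIRE_BIT`, `in_fire_region()` and `handle_fire_touch()` are hypothetical stand-ins for UITouchPhase, CGPoint, Q3Event_Fire, EVENTREGIONFIRE and _handleTouch. It returns +1 where the Objective-C code would queue a "fire pressed" event, -1 for "fire released" and 0 otherwise.

```c
#include <stdbool.h>

/* Hypothetical stand-ins for the UITouchPhase values used above. */
typedef enum { PHASE_BEGAN, PHASE_MOVED, PHASE_ENDED } TouchPhase;

typedef struct { float x, y; } Point2;

#define SCREEN_W 320.0f
#define SCREEN_H 480.0f
#define FIRE_BOX 40.0f   /* assumed 40 x 40 box in the lower-right corner */
#define FIRE_BIT 0x1u

static bool in_fire_region(Point2 p)
{
    return p.x >= SCREEN_W - FIRE_BOX && p.y >= SCREEN_H - FIRE_BOX;
}

/* 'previous' is the previous touch location for moved touches and equals
   'current' for all other phases, mirroring _handleTouch.
   Returns +1 = queue "fire down", -1 = queue "fire up", 0 = no event. */
int handle_fire_touch(unsigned *bitmask, TouchPhase phase,
                      Point2 previous, Point2 current)
{
    if (!in_fire_region(previous))
        return 0;

    switch (phase) {
    case PHASE_BEGAN:
        if (!(*bitmask & FIRE_BIT)) {          /* only trigger once */
            *bitmask |= FIRE_BIT;
            return +1;
        }
        break;
    case PHASE_ENDED:
        if (*bitmask & FIRE_BIT) {
            *bitmask &= ~FIRE_BIT;
            return -1;
        }
        break;
    case PHASE_MOVED:
        /* finger slid out of the region: switch off */
        if (!in_fire_region(current) && (*bitmask & FIRE_BIT)) {
            *bitmask &= ~FIRE_BIT;
            return -1;
        }
        break;
    }
    return 0;
}
```

Because the region test uses the previous location, a finger that lands in the box and then slides out still produces a release event, which is exactly the case that breaks the naive on/off approach.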

Sunday, December 14, 2008

iP* programming tip #7

- glEnable(GL_POINT_SPRITE_OES) - this is the global switch that turns point sprites on. Once enabled, all points are drawn as point sprites.

- glTexEnvi(GL_POINT_SPRITE_OES, GL_COORD_REPLACE_OES, GL_TRUE) - this enables [0..1] texture coordinate generation for the four corners of the point sprite. It can be set per texture unit. If disabled, all corners of the quad have the same texture coordinate.

- glPointParameterfv(GLenum pname, const GLfloat *params) - this is used to set the point attenuation as described below.

With d being the eye-space distance of the point, the derived point size is:

derived_size = impl_clamp(user_clamp(size * sqrt(1 / (a + b * d + c * d^2))))

user_clamp represents the GL_POINT_SIZE_MIN and GL_POINT_SIZE_MAX settings of glPointParameterfv(). impl_clamp represents an implementation-dependent point size range. GL_POINT_DISTANCE_ATTENUATION is used to pass in params as an array containing the distance attenuation coefficients a, b, and c, in that order.

In case multisampling is used (not officially supported), the point size is clamped to have a minimum threshold, and the alpha value of the point is modulated by the following equation:

alpha_fade = (derived_size / threshold)^2 if derived_size < threshold, otherwise 1

GL_POINT_FADE_THRESHOLD_SIZE specifies the point alpha fade threshold.

Check out the Oolong engine example Particle System for an implementation. It renders 600 point sprites at nearly 60 fps. Increasing the number of point sprites to 3000 drops the frame rate to around 20 fps.

Friday, December 12, 2008

Free ShaderX Books

http://tog.acm.org/resources/shaderx/

Thursday, December 11, 2008

iP* programming tip #6

The OES_matrix_palette extension allows the usage of a set of matrices to transform the vertices and the normals. Each vertex has a set of n indices into the palette and a corresponding set of n weights.

The vertex is transformed by the modelview matrices selected by the vertex's indices. These results are then scaled by the corresponding weights and summed to create the eye-space vertex.

A similar procedure is followed for normals, except that they are transformed by the inverse transpose of each palette matrix.

The main OpenGL ES functions that support the matrix palette are

- glEnable(GL_MATRIX_PALETTE_OES) and glMatrixMode(GL_MATRIX_PALETTE_OES) - enable the palette and set the matrix mode to palette

- glCurrentPaletteMatrixOES(n) - select the currently active palette matrix; each matrix in the palette is then loaded with the usual matrix calls (or with glLoadPaletteFromModelViewMatrixOES())

- glEnableClientState(GL_MATRIX_INDEX_ARRAY_OES) and glEnableClientState(GL_WEIGHT_ARRAY_OES) - enable the index and weight vertex arrays

- glMatrixIndexPointerOES() and glWeightPointerOES() - load the per-vertex index and weight data
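What the hardware does per vertex can be written down in a few lines. This is a CPU sketch of the position part only (normals would use the inverse transpose of each palette matrix and are left out); `Mat4`, `transform_point()` and `palette_skin_vertex()` are hypothetical names, and the matrices are assumed to be column-major as OpenGL ES stores them.

```c
/* Column-major 4x4 matrix, translation in m[12..14]. */
typedef struct { float m[16]; } Mat4;

/* Transform point p (implicit w = 1) by matrix M. */
static void transform_point(const Mat4 *M, const float p[3], float out[3])
{
    for (int r = 0; r < 3; ++r)
        out[r] = M->m[r] * p[0] + M->m[4 + r] * p[1]
               + M->m[8 + r] * p[2] + M->m[12 + r];
}

/* Eye-space position = sum over i of weights[i] * (palette[indices[i]] * p). */
void palette_skin_vertex(const Mat4 *palette, const unsigned char *indices,
                         const float *weights, int n,
                         const float p[3], float out[3])
{
    out[0] = out[1] = out[2] = 0.0f;
    for (int i = 0; i < n; ++i) {
        float t[3];
        transform_point(&palette[indices[i]], p, t);
        out[0] += weights[i] * t[0];
        out[1] += weights[i] * t[1];
        out[2] += weights[i] * t[2];
    }
}
```

A vertex weighted 50/50 between an identity matrix and a matrix that translates by 2 along x ends up moved by 1 along x, halfway between the two "bones".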

Tuesday, December 9, 2008

Cached Shadow Maps

Yann Lombard explains how to pick the light sources that should cast shadows. He uses distance, intensity, influence and other parameters to pick them.

He uses a cache of shadow maps that can have different resolutions. His cache solution is pretty generic; I would build a more dedicated cache just for shadow maps.

After picking the light sources that should cast shadows, I would only update those shadow maps in the cache that change. Whether a shadow map changes depends on whether there is an object with a dynamic flag in its shadow view frustum.

Think about what happens when you approach a scene with lights that cast shadows:

1. the lights that are close enough and appropriate to cast shadows are picked -> their shadow maps are updated

2. while we move on, we only update the shadow maps of the lights from 1. if there is a moving / dynamic object in their shadow view; meanwhile we start with the next bunch of shadows while the shadows from 1. are still in view

3. and so on.
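The update policy above boils down to one test per cached map: render it if it has never been rendered, or if a dynamic object is in its shadow view. A minimal C sketch, assuming culling has already set a per-light flag; `ShadowCacheEntry` and `update_shadow_cache()` are hypothetical, and the `max_render` budget is an added assumption so that a burst of newly picked lights is spread over several frames.

```c
#include <stdbool.h>

/* Hypothetical cache entry: one shadow map per shadow-casting light. */
typedef struct {
    int  light_id;
    bool valid;              /* map has been rendered at least once */
    bool dynamic_in_frustum; /* set by culling: dynamic object in shadow view */
} ShadowCacheEntry;

/* Writes the ids of lights whose shadow maps must be re-rendered this
   frame into to_render (at most max_render of them) and returns the count.
   Valid maps without dynamic objects in view are reused from the cache;
   invalid maps beyond the budget simply wait for a later frame. */
int update_shadow_cache(ShadowCacheEntry *entries, int count,
                        int *to_render, int max_render)
{
    int n = 0;
    for (int i = 0; i < count && n < max_render; ++i) {
        if (!entries[i].valid || entries[i].dynamic_in_frustum) {
            to_render[n++] = entries[i].light_id;
            entries[i].valid = true;
        }
    }
    return n;
}
```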

Saturday, December 6, 2008

Dual-Paraboloid Shadow Maps

http://www.gamedev.net/community/forums/topic.asp?topic_id=517022

This is pretty cool. Culling objects into the two hemispheres is not needed here. Other than that, the usual comparison between cube maps and dual-paraboloid maps applies:

- the number of draw calls is the same ... so you do not save on this front

- you lose memory bandwidth with cube maps because in the worst case you render everything into six maps that are probably bigger than 256x256 ... in reality you won't render six times and therefore have fewer draw calls than with dual-paraboloid maps

- the quality is much better for cube maps

- the speed difference is not that huge, because dual-paraboloid maps use things like texkill or alpha test to pick the right map, and therefore rendering is pretty slow without Hierarchical Z.

I think both techniques are equivalent for environment maps ... for shadows you might prefer cube maps; if you want to save memory, dual-paraboloid maps are the only way to go.

Update: just saw this article on dual-paraboloid shadow maps:

http://osman.brian.googlepages.com/dpsm.pdf

The basic idea is that you do the WorldSpace -> Paraboloid transformation in the pixel shader during your lighting pass. That avoids having the paraboloid co-ordinates interpolated incorrectly.

iP* programming tip #5

Overall, the iP* platform has the following limits:

- The maximum texture size is 1024x1024

- 2D textures are supported; other texture types are not

- Stencil buffers aren't available

Texture filtering is described on page 99 of the iPhone Application Programming Guide. An extension for anisotropic filtering is also supported, but I haven't tried it.

The pixel shader of the iP* platform is programmed via texture combiners. There is an overview of all OpenGL ES 1.1 calls at

http://www.khronos.org/opengles/sdk/1.1/docs/man/

The texture combiners are described in the page on glTexEnv. Per-Pixel Lighting is a popular example:

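    // Stage 0: N.L
    glActiveTexture(GL_TEXTURE0);
    glTexEnvi(GL_TEXTURE_ENV, GL_TEXTURE_ENV_MODE, GL_COMBINE);
    glTexEnvi(GL_TEXTURE_ENV, GL_COMBINE_RGB, GL_DOT3_RGB);     // Blend0 = N.L
    glTexEnvi(GL_TEXTURE_ENV, GL_SRC0_RGB, GL_TEXTURE);         // normal map
    glTexEnvi(GL_TEXTURE_ENV, GL_OPERAND0_RGB, GL_SRC_COLOR);
    glTexEnvi(GL_TEXTURE_ENV, GL_SRC1_RGB, GL_PRIMARY_COLOR);   // light vec
    glTexEnvi(GL_TEXTURE_ENV, GL_OPERAND1_RGB, GL_SRC_COLOR);

    // Stage 1: N.L * color map
    glActiveTexture(GL_TEXTURE1);
    glTexEnvi(GL_TEXTURE_ENV, GL_TEXTURE_ENV_MODE, GL_COMBINE);
    glTexEnvi(GL_TEXTURE_ENV, GL_COMBINE_RGB, GL_MODULATE);     // N.L * color map
    glTexEnvi(GL_TEXTURE_ENV, GL_SRC0_RGB, GL_PREVIOUS);        // previous result: N.L
    glTexEnvi(GL_TEXTURE_ENV, GL_OPERAND0_RGB, GL_SRC_COLOR);
    glTexEnvi(GL_TEXTURE_ENV, GL_SRC1_RGB, GL_TEXTURE);         // color map
    glTexEnvi(GL_TEXTURE_ENV, GL_OPERAND1_RGB, GL_SRC_COLOR);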

Check out the Oolong example "Per-Pixel Lighting" in the folder Examples/Renderer for a full implementation.
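The combiner math explains why the light vector has to be range-compressed before it goes into the primary (vertex) color: GL_DOT3_RGB computes 4 * ((a.r - 0.5) * (b.r - 0.5) + (a.g - 0.5) * (b.g - 0.5) + (a.b - 0.5) * (b.b - 0.5)), clamped to [0, 1]. Here is a CPU sketch of the two stages in C; `Color3`, `encode_vector()`, `combine_dot3()` and `combine_modulate()` are hypothetical names used only for illustration.

```c
typedef struct { float r, g, b; } Color3;

/* Range-compress a unit vector into [0, 1]: c = 0.5 * v + 0.5.
   This is how the light vector is stored in the primary color. */
Color3 encode_vector(float x, float y, float z)
{
    Color3 c = { 0.5f * x + 0.5f, 0.5f * y + 0.5f, 0.5f * z + 0.5f };
    return c;
}

static float clamp01(float v) { return v < 0.0f ? 0.0f : (v > 1.0f ? 1.0f : v); }

/* Stage 0, GL_DOT3_RGB: N.L of two range-compressed vectors, clamped. */
float combine_dot3(Color3 a, Color3 b)
{
    float d = 4.0f * ((a.r - 0.5f) * (b.r - 0.5f) +
                      (a.g - 0.5f) * (b.g - 0.5f) +
                      (a.b - 0.5f) * (b.b - 0.5f));
    return clamp01(d);
}

/* Stage 1, GL_MODULATE: previous result (N.L) times the color map. */
Color3 combine_modulate(float n_dot_l, Color3 color_map)
{
    Color3 c = { n_dot_l * color_map.r, n_dot_l * color_map.g,
                 n_dot_l * color_map.b };
    return c;
}
```

The factor 4 undoes the two 0.5 scales, so a normal facing the light gives 1.0, a perpendicular light gives 0.0 and back-facing lights clamp to 0.0.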

Friday, December 5, 2008

iP* programming tip #4

    // "View" for games in applicationDidFinishLaunching
    // get screen rectangle
    CGRect rect = [[UIScreen mainScreen] bounds];
    // create one full-screen window
    _window = [[UIWindow alloc] initWithFrame:rect];
    // create OpenGL view
    _glView = [[EAGLView alloc] initWithFrame:rect pixelFormat:GL_RGB565_OES depthFormat:GL_DEPTH_COMPONENT16_OES preserveBackBuffer:NO];
    // attach the view to the window
    [_window addSubview:_glView];
    // show the window
    [_window makeKeyAndVisible];

The screen dimensions are retrieved from a screen object. Erica Sadun compares UIWindow to a TV set and UIView to the actors in a TV show. I think this is a good way to memorize the functionality. In our case EAGLView, which comes with the Apple SDK, inherits from UIView and adds all the OpenGL ES functionality to it. We then attach this view to the window and make everything visible.

Oolong assumes a full-screen window that does not rotate; it is always in widescreen view. The reason is that otherwise using the accelerometer, for example to drive a camera, wouldn't be possible.

There is a corresponding dealloc method to this code that frees all the allocated resources again.

An Oolong engine example mainly consists of two files: a file with "delegate" in its name and the main application file. The main application file has the following methods:

- InitApplication()

- QuitApplication()

- UpdateScene()

- RenderScene()

The first pair of methods does one-time, device-dependent resource allocation and deallocation, UpdateScene() prepares scene rendering, and RenderScene() does what its name says. If you would like to extend this framework to handle orientation changes, you would add a pair of methods with names like InitView() and ReleaseView() and put all orientation-dependent code in there. Those methods would be called once at the start of the application and then once every time the orientation changes.

One other basic topic is the usage of C++. Mixing Objective-C and C++ is what Apple calls Objective-C++. Cocoa Touch has to be addressed with Objective-C, so it cannot be called from plain C or C++ code. For game developers there is lots of existing C/C++ code to be reused, and using it makes games easier to port to several platforms (it is quite common to launch an IP on several platforms at once). The best solution to this dilemma is to use Objective-C where necessary and then wrap to C/C++.

If a file has the suffix *.mm, the compiler can handle Objective-C, C and C++ code in the same file, within certain limits. If you look in Oolong for files with this suffix you will find many of them. There are whitepapers and tutorials on Objective-C++ that describe the limitations of the approach. Because garbage collection is not used on the iP* devices, I want to believe that the challenges of making this work on this platform are smaller. Here are a few examples of how the bridge between Objective-C and C/C++ is built in Oolong. In the main application class of every Oolong example we bridge from the Objective-C code in the "delegate" file to the main application file like this:

    // in Application.h
    class CShell
    {
        ..
        bool UpdateScene();

    // in Application.mm
    bool CShell::UpdateScene()
    ..

    // in Delegate.mm
    static CShell *shell = NULL;
    ..
    if (!shell->UpdateScene()) printf("Update error\n");

An example of how to call an Objective-C method from C++ can look like this (C wrapper):

    // in PolarCamera.mm -> C wrapper
    void UpdatePolarCamera()
    {
        [idFrame UpdateCamera];
    }

    -(void) UpdateCamera
    {
        ..

    // in Application.mm
    bool CShell::UpdateScene()
    {
        UpdatePolarCamera();
        ..

The idea is to keep the id of the Objective-C object (here idFrame) in a variable that the C wrapper can see, and then use this id to call a method of that object from the outside.

If you want to see all this in action, open up the skeleton example in the Oolong Engine source code. You can find it at

Examples/Renderer/Skeleton

Now that we are at the end of this tip, I would like to refer to a blog post that my friend Canis wrote about memory management. It applies to the iP* platforms quite well:

http://www.wooji-juice.com/blog/cocoa-6-memory.html

Wednesday, December 3, 2008

iP* programming tip #3

- the .app folder holds everything; no particular hierarchy is required

- .lproj language support

- Executable

- Info.plist – XML property list that holds the product identifier; this allows the app to communicate with other apps and to register with SpringBoard

- Icon.png (57x57); set UIPrerenderedIcon to true in Info.plist to not receive the gloss / shiny effect

- Default.png … should match the game background; no “Please wait” sign ... for a smooth fade

- XIB (NIB) files – precooked, addressable user interface classes; remove the NSMainNibFile key from Info.plist if you do not use them

- Your files; for example in demoq3/quake3.pak

Every iP* app is sandboxed. That means that only certain folders, network resources and hardware can be accessed. Here is a list of folders that might be affected by your application:

- Preferences files are in var/mobile/Library/Preferences, named after the product identifier (e.g. com.engel.Quake.plist); they are updated when you use something like NSUserDefaults to add persistence to game data such as save and load

- App plug-in /System/Library (not available)

- Documents in /Documents

- Each app has a tmp folder

- Sandbox spec e.g. in /usr/share/sandbox > don’t touch

Tuesday, December 2, 2008

HLSL 5.0 OOP / Dynamic Shader Linking

iP* programming tip #2

Here are a few starting points to get used to the environment:

- To work in one window only, use the "All-in-One" mode if you miss Visual Studio (http://developer.apple.com/tools/xcode/newinxcode23.html)

You have to start Xcode without loading any projects. Go straight to the Preferences General tab, where you'll see "Layout: Default". Switch that to "Layout: All-In-One" and click OK. Then you can load your projects.

- Apple+tilde – cycle between windows in the foreground

- Apple+w – closes the front window in most apps

- Apple+tab – cycle through applications

For everyone who prefers hotkeys to start applications you might check out Quicksilver. Automatically hiding and showing the Dock gives you more workspace. If you are giving presentations about your work, check out Stage Hand for the iPod touch / iPhone.

For reference you should download the POWERVR SDK for Linux. It is a very helpful reference for the MBX chip in your target platforms.

Not very game or graphics programming related but very helpful is Erica Sadun's book "The iPhone Developer's Cookbook". She does not waste your time with details you are not interested in and comes straight to the point. Just reading the first section of the book is already pretty cool.

You want to have this book if you want to dive into any form of Cocoa interface programming.

The last book I want to recommend is Andrew M. Duncan's "Objective-C Pocket Reference". I have this usually lying on my table if I stumble over Objective-C syntax. If you are a C/C++ programmer you probably do not need more than this. There are also Objective-C tutorials on the iPhone developer website and on the general Apple website.

If you have any other tips that I can add to the website, I will mention them with your name.

The next iP* programming tip will be more programming related ... I promise :-)

Sunday, November 30, 2008

iP* programming tip #1

Starting iPhone development first requires knowledge of the underlying hardware and what it can do for you. Here are the latest hardware specs I am aware of (a rumour mentioned iPods that run the CPU at 532 MHz ... I haven't found any evidence for this).

- GPU: PowerVR MBXLite with VGPLite at 103 MHz

- ~DX8 hardware with vs_1_1 and ps_1_1 functionality

- Vertex shader is not exposed

- Pixel shader is programmed with texture combiners

- 16 MB VRAM – not mentioned anywhere

- CPU: ARM 1176 at 412 MHz (can do 600 MHz)

- VFP unit 128-bit Multimedia unit ~= SIMD unit

- 128 MB RAM; only 24 MB for apps allowed

- 320x480 px at 163 ppi screen

- LIS302DL, a 3-axis accelerometer with an update rate of up to 400 Hz

- Multi-Touch: up to five fingers

- PVRTC texture compression: color map 2-bit per pixel and normal map 4-bit per-pixel

I wonder how the 16 MB of VRAM are handled. I assume this is where the VBOs and textures are stored. Regarding the 24 MB limit for apps, I wonder what happens if an application generates geometry and textures dynamically ... at what point does the sandbox of the iPhone / iPod touch stop the application? I did not find any evidence for this.
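As a rough worked example of what fits into 16 MB, assuming it really is used for textures: a 1024x1024 texture needs 256 KB as PVRTC 2bpp, 512 KB as PVRTC 4bpp, 2 MB as RGB565 and 4 MB as RGBA8888. So only four uncompressed 32-bit 1024x1024 textures fit, versus 64 at 2bpp. The helper below is a hypothetical one-liner that ignores mipmaps (which add about a third) and PVRTC's minimum texture size padding.

```c
#include <stddef.h>

/* Bytes for one mip level of a w x h texture at bpp bits per pixel.
   Ignores mip chains and PVRTC minimum-size padding. */
size_t texture_bytes(size_t w, size_t h, size_t bpp)
{
    return w * h * bpp / 8;
}
```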

WARP - Running DX10 and DX11 Games on CPUs

http://msdn.microsoft.com/en-us/library/dd285359.aspx

Running Crysis on an 8-core CPU at a resolution of 800x600 with 7.2 fps is an achievement. If WARP were hand-optimized very well, it would be a very attractive target to write code for: 4 - 8 cores will be a common target platform in the next two years. Because it can be switched off when a GPU is present, this is a perfect target for game developers. What this means is that you can write a game with the DirectX 10 API and target not only all the GPUs out there but also machines without a GPU ... this is one of the best developments for the PC market in a long time. I am excited!

The other interesting consequence of this development: if INTEL's "Bread & Butter" chips run games with the most important game API, it would be a good idea for INTEL to put a bunch of engineers behind this and optimize WARP (in case they haven't already done so). We are talking about the big game market here, consisting of games like "The Sims", "World of Warcraft" and similar games. The high-end PC gaming market is much smaller.

Thursday, November 6, 2008

iPhone ARM VFP code

    void MatrixMultiplyF(
        MATRIXf &mOut,
        const MATRIXf &mA,
        const MATRIXf &mB)
    {
    #if !(TARGET_CPU_ARM)
        // Reference C path (e.g. for the simulator)
        MATRIXf mRet;

        /* Perform calculation on a dummy matrix (mRet) */
        mRet.f[ 0] = mA.f[ 0]*mB.f[ 0] + mA.f[ 1]*mB.f[ 4] + mA.f[ 2]*mB.f[ 8] + mA.f[ 3]*mB.f[12];
        mRet.f[ 1] = mA.f[ 0]*mB.f[ 1] + mA.f[ 1]*mB.f[ 5] + mA.f[ 2]*mB.f[ 9] + mA.f[ 3]*mB.f[13];
        mRet.f[ 2] = mA.f[ 0]*mB.f[ 2] + mA.f[ 1]*mB.f[ 6] + mA.f[ 2]*mB.f[10] + mA.f[ 3]*mB.f[14];
        mRet.f[ 3] = mA.f[ 0]*mB.f[ 3] + mA.f[ 1]*mB.f[ 7] + mA.f[ 2]*mB.f[11] + mA.f[ 3]*mB.f[15];
        mRet.f[ 4] = mA.f[ 4]*mB.f[ 0] + mA.f[ 5]*mB.f[ 4] + mA.f[ 6]*mB.f[ 8] + mA.f[ 7]*mB.f[12];
        mRet.f[ 5] = mA.f[ 4]*mB.f[ 1] + mA.f[ 5]*mB.f[ 5] + mA.f[ 6]*mB.f[ 9] + mA.f[ 7]*mB.f[13];
        mRet.f[ 6] = mA.f[ 4]*mB.f[ 2] + mA.f[ 5]*mB.f[ 6] + mA.f[ 6]*mB.f[10] + mA.f[ 7]*mB.f[14];
        mRet.f[ 7] = mA.f[ 4]*mB.f[ 3] + mA.f[ 5]*mB.f[ 7] + mA.f[ 6]*mB.f[11] + mA.f[ 7]*mB.f[15];
        mRet.f[ 8] = mA.f[ 8]*mB.f[ 0] + mA.f[ 9]*mB.f[ 4] + mA.f[10]*mB.f[ 8] + mA.f[11]*mB.f[12];
        mRet.f[ 9] = mA.f[ 8]*mB.f[ 1] + mA.f[ 9]*mB.f[ 5] + mA.f[10]*mB.f[ 9] + mA.f[11]*mB.f[13];
        mRet.f[10] = mA.f[ 8]*mB.f[ 2] + mA.f[ 9]*mB.f[ 6] + mA.f[10]*mB.f[10] + mA.f[11]*mB.f[14];
        mRet.f[11] = mA.f[ 8]*mB.f[ 3] + mA.f[ 9]*mB.f[ 7] + mA.f[10]*mB.f[11] + mA.f[11]*mB.f[15];
        mRet.f[12] = mA.f[12]*mB.f[ 0] + mA.f[13]*mB.f[ 4] + mA.f[14]*mB.f[ 8] + mA.f[15]*mB.f[12];
        mRet.f[13] = mA.f[12]*mB.f[ 1] + mA.f[13]*mB.f[ 5] + mA.f[14]*mB.f[ 9] + mA.f[15]*mB.f[13];
        mRet.f[14] = mA.f[12]*mB.f[ 2] + mA.f[13]*mB.f[ 6] + mA.f[14]*mB.f[10] + mA.f[15]*mB.f[14];
        mRet.f[15] = mA.f[12]*mB.f[ 3] + mA.f[13]*mB.f[ 7] + mA.f[14]*mB.f[11] + mA.f[15]*mB.f[15];

        /* Copy result into mOut */
        mOut = mRet;
    #else
        const float* src_ptr1 = &mA.f[0];
        const float* src_ptr2 = &mB.f[0];
        float* dst_ptr = &mOut.f[0];

        asm volatile(
            // switch to ARM mode
            // involves an unconditional jump and mode switch (opcode bx)
            // the lowest bit in the address signals whether ARM (bit cleared)
            // or Thumb should be selected (bit set)
            ".align 4                \n\t"
            "mov r0, pc              \n\t"
            "bx r0                   \n\t"
            ".arm                    \n\t"

            // set vector length to 4
            // example: fadds s8, s8, s16 means that the content of s8 - s11
            // is added to s16 - s19 and stored in s8 - s11
            "fmrx r0, fpscr          \n\t" // load fpscr status reg into r0
            "bic r0, r0, #0x00370000 \n\t" // bit clear stride and length
            "orr r0, r0, #0x00030000 \n\t" // set length to 4 (11)
            "fmxr fpscr, r0          \n\t" // upload r0 to fpscr
            // Note: this stalls the FPU

            // result[0][1][2][3] = mA.f[0][0][0][0] * mB.f[0][1][2][3]
            // result[0][1][2][3] = result + mA.f[1][1][1][1] * mB.f[4][5][6][7]
            // result[0][1][2][3] = result + mA.f[2][2][2][2] * mB.f[8][9][10][11]
            // result[0][1][2][3] = result + mA.f[3][3][3][3] * mB.f[12][13][14][15]

            // s0 - s31
            // if Fd == s0 - s7 -> treated as scalar, all others treated as vector
            // load the whole second matrix (mB) into s8 - s23 first
            "fldmias %2, {s8-s23}    \n\t"

            // load first column to scalar bank
            "fldmias %1!, {s0-s3}    \n\t"
            // first column times matrix
            "fmuls s24, s8, s0       \n\t"
            "fmacs s24, s12, s1      \n\t"
            "fmacs s24, s16, s2      \n\t"
            "fmacs s24, s20, s3      \n\t"
            // save first column
            "fstmias %0!, {s24-s27}  \n\t"

            // load second column to scalar bank
            "fldmias %1!, {s4-s7}    \n\t"
            // second column times matrix
            "fmuls s28, s8, s4       \n\t"
            "fmacs s28, s12, s5      \n\t"
            "fmacs s28, s16, s6      \n\t"
            "fmacs s28, s20, s7      \n\t"
            // save second column
            "fstmias %0!, {s28-s31}  \n\t"

            // load third column to scalar bank
            "fldmias %1!, {s0-s3}    \n\t"
            // third column times matrix
            "fmuls s24, s8, s0       \n\t"
            "fmacs s24, s12, s1      \n\t"
            "fmacs s24, s16, s2      \n\t"
            "fmacs s24, s20, s3      \n\t"
            // save third column
            "fstmias %0!, {s24-s27}  \n\t"

            // load fourth column to scalar bank
            "fldmias %1!, {s4-s7}    \n\t"
            // fourth column times matrix
            "fmuls s28, s8, s4       \n\t"
            "fmacs s28, s12, s5      \n\t"
            "fmacs s28, s16, s6      \n\t"
            "fmacs s28, s20, s7      \n\t"
            // save fourth column
            "fstmias %0!, {s28-s31}  \n\t"

            // reset vector length to 1
            "fmrx r0, fpscr          \n\t" // load fpscr status reg into r0
            "bic r0, r0, #0x00370000 \n\t" // bit clear stride and length
            "fmxr fpscr, r0          \n\t" // upload r0 to fpscr

            // switch back to Thumb mode
            // lower bit of destination is set to 1
            "add r0, pc, #1          \n\t"
            "bx r0                   \n\t"
            ".thumb                  \n\t"

            // binds variables to registers
            : "=r" (dst_ptr), "=r" (src_ptr1), "=r" (src_ptr2)
            : "0" (dst_ptr), "1" (src_ptr1), "2" (src_ptr2)
            : "r0", "memory"
        );
    #endif
    }
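To check what the VFP code computes, here is a plain-C sketch that follows the same schedule: each output row is built from one multiply (fmuls) and three multiply-accumulates (fmacs), where one element of the current row of mA scales a whole 4-float row of mB. `matrix_multiply_rows()` is a hypothetical helper working on raw float[16] arrays in the same layout as MATRIXf.f; it produces the same result as the reference C path, out[4*i + j] = sum over k of a[4*i + k] * b[4*k + j].

```c
/* Same result as the C path of MatrixMultiplyF, computed in the order
   the VFP code uses. 'acc' plays the role of s24-s27 / s28-s31. */
void matrix_multiply_rows(float out[16], const float a[16], const float b[16])
{
    for (int row = 0; row < 4; ++row) {
        const float *s = &a[row * 4];     /* "scalar bank": s0-s3 or s4-s7 */
        float acc[4];
        for (int j = 0; j < 4; ++j)       /* fmuls: acc = b[0..3] * s[0] */
            acc[j] = b[j] * s[0];
        for (int k = 1; k < 4; ++k)       /* fmacs: acc += b[4k..4k+3] * s[k] */
            for (int j = 0; j < 4; ++j)
                acc[j] += b[4 * k + j] * s[k];
        for (int j = 0; j < 4; ++j)       /* fstmias: store the finished row */
            out[row * 4 + j] = acc[j];
    }
}
```

Running both versions on the same inputs in the simulator and on the device is a cheap way to catch mistakes in hand-written VFP code.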

Monday, October 20, 2008

Midnight Club: Los Angeles

Thursday, October 16, 2008

Hardware GPU / SPU / CPU

Thursday, October 2, 2008

S3 Graphics Chrome 440 GTX

I just went through the DirectX 10 SDK examples. Motion Blur is about 5.8 fps and NBodyGravity is about 1.8 fps. The instancing example runs with 11.90 fps. I use the VISTA 64-bit beta drivers 7.15.12.0217-18.05.03. The other examples run fast enough. The CPU does not seem to become overly busy.

Just saw that there is a newer driver. The latest WHQL'ed driver has the version number 248. The motion blur example runs at 6.3 fps with some artefacts (the beta driver had those as well), Instancing at 11.77 fps and the NBodyGravity example at 1.83 fps ... probably not an accurate way to measure this stuff at all, but at least it gives a rough idea.

The integrated INTEL 4500 MHD chip in my notebook is slower than this, but it supports at least DX10 and the notebook is super light :-) ... for development, what matters to me is feature support (most of the time I prototype effects on PCs).

While playing around with the two chipsets I found out that the mobile INTEL chip also runs the new DirectX 10.1 SDK example Depth of Field at more than 20 fps. This is quite impressive. The Chrome 440 GTX runs this example at more than 100 fps. The new Raycast Terrain example runs at 19.6 fps on the Chrome and at 7.6 fps on the mobile INTEL chip set. The example that does not run on the mobile INTEL chip is the ProceduralMaterial example. It runs at less than 1 fps on the Chrome 440 GTX.

Nevertheless it seems like both companies did their homework with the DirectX SDK.

So I just ran a bunch of ShaderX7 example programs against the cards. While the INTEL mobile chip shows errors in some of the DirectX 9 examples and crashes in some of the DirectX 10 ones, the Chrome even handles the DirectX 10.1 examples that I have, which usually only run on ATI hardware ... nice!

One thing that I haven't thought of is GLSL support. I thought that only ATI and NVIDIA have GLSL support but S3 seems to have it as well. INTEL's mobile chip does not have it so ...

I will try out the Futuremark 3DMark Vantage benchmark. It seems a Chrome 400 Series is listed there with a score of 222. Probably not too bad, considering that they probably do not pay Futuremark to be a member of their program.

Update October 4th: the S3 Chrome 440 GTX scored 340 as the Graphics score in the trial version of 3DMark Vantage.

Wednesday, October 1, 2008

Old Interview

Tuesday, September 30, 2008

64-bit VISTA Tricks

Sunday, September 28, 2008

Light Pre-Pass: More Blood

N.H^n = (N.L * N.H^n * Att) / (N.L * Att)

This division happens in the forward rendering path. The light source has its own shininess value in there, which is the power n. With the specular component extracted, I can apply the material shininess value nm like this:

(N.H^n)^nm

Then I can reconstruct the Blinn-Phong lighting equation. The data stored in the Light Buffer is treated like one light source. As a reminder, the first three channels of the light buffer hold

N.L * Att * DiffuseColor

and the fourth channel holds the specular term N.L * N.H^n * Att. The final color is then:

Color = Ambient + (LightBuffer.rgb * MatDiffInt) + MatSpecInt * (N.H^n)^nm * N.L * Att

So how could I do this :-)

N.H^n = (N.L * N.H^n * Att) / (N.L * Att)

N.L * Att is not stored in any channel of the Light Buffer. How can I get it? The trick is to convert the first three channels of the Light Buffer to luminance. The value should be pretty close to N.L * Att.

This also opens up a bunch of ideas for different materials. Every time you need the N.L * Att term you replace it with luminance. This should give you a wide range of materials.

The results I get are very exciting. Here is a list of advantages over a Deferred Renderer:

- lower cost per light (you calculate much less in the Light pass)

- easier MSAA

- more material variety

- less read memory bandwidth -> fetches only two instead of the four textures it takes in a Deferred Renderer

- runs on hardware without ps_3_0 and MRT -> runs on DX8.1 hardware

Sunday, September 21, 2008

Shader Workflow - Why Shader Generators are Bad

If you define shader permutations as shaders with lots of small differences but the same underlying code, then you have to live with the fact that whatever is sent to the hardware is a full-blown shader, even if exactly the same skinning code is in every other shader.

So the end result is always the same ... whatever you do on the level above that.

What I describe is a practical approach to handle shaders with a high amount of material variety and a good workflow.

Shaders are some of the most expensive assets in terms of production value and programming-team time. They need to be the most highly optimized code we have, because it is much harder to squeeze performance out of a GPU than out of a CPU.

Shader generators or material editors (or whatever you call them) are not an appropriate way to generate or handle shaders, because they are hard to maintain, do not offer enough material variety and are not very efficient, since it is hard to hand-optimize code that is generated on the fly.

This is why developers do not use them and do not want to use them. They may play a role in indie or non-profit development, because those teams are money- and time-constrained and do not have to compete in the AAA sector.

In general, the basic mistake made by people who think that ueber-shaders, material editors or shader generators make sense is that they do not understand how to program a graphics card. They assume it is similar to programming a CPU and therefore think they could generate code for these cards.

It would make more sense to generate code on the fly for CPUs (... which also happens in the graphics card drivers) and in other places (real-time assemblers) than for GPUs, because GPUs do not have anything close to linear performance behaviour. The difference between a performance hotspot and a point where you made something wrong can be 1:1000 in time (according to a presentation by Matthias Wloka). You hand-optimize shaders to hit those hotspots, and the way you do it is by analyzing the results provided by PIX and other tools to find out where the performance hotspot of the shader is.

Thursday, September 18, 2008

ARM VFP ASM development

here

The idea is to have a math library that is optimized for the VFP unit of an ARM processor. This should be useful on the iPhone / iPod touch.

Friday, September 12, 2008

More Mobile Development

Depending on how easy it will be to get Oolong running on this I will extend Oolong to support this platform as well.

Wednesday, September 10, 2008

Shader Workflow

Setting up a good shader workflow is easy. You just set up a folder called shaderlib and a folder called shader. In shaderlib there are files like lighting.fxh, utility.fxh, normals.fxh, skinning.fxh etc., and in the shader directory there are files like metal.fx, skin.fx, stone.fx, eyelashes.fx and eyes.fx. In each of those *.fx files there is a technique for whatever special state you need; you might have techniques like lit, depthwrite etc. in there.

All the "intelligence" is in the shaderlib directory in the *.fxh files. The fx files just stitch together function calls. The HLSL compiler resolves those function calls by inlining the code.

So it is easy to just send someone the shaderlib directory with all the files in there and share your shader code this way.

In the lighting.fxh include file you will have all kinds of lighting models like Ashikhmin-Shirley, Cook-Torrance or Oren-Nayar and obviously Blinn-Phong or just a different BRDF that can mimic a certain material especially good. In normals.fxh you have routines that can fetch normals in different ways and unpack them. Obviously all the DXT5 and DXT1 tricks are in there but also routines that let you fetch height data to generate normals from it. In utility.fxh you have support for different color spaces, special optimizations for different platforms, like special texture fetches etc. In skinning.fxh you have all code related to skinning and animation ... etc.

If you give this library to a graphics programmer, he obviously has to put together the shader on his own, but he can start by looking at what is requested and try different approaches to see what fits the job best. He does not have to come up with ways to generate a normal from height or color data or to deal with different color spaces.

For a good, efficient and high quality workflow in a game team, this is what you want.

Tuesday, September 9, 2008

Calculating Screen-Space Texture Coordinates for the 2D Projection of a Volume

1. Transforming the position into projection space is done in the vertex shader by multiplying it with the concatenated World-View-Projection matrix.

2. The Direct3D run-time then divides x, y and z by the W component, which for a perspective projection holds the view-space Z value. The result is what this post calls clip space (strictly speaking, normalized device coordinates), where x and y lie in the [-1.0, 1.0] range.

$$x_{clip} = \frac{x_{proj}}{w_{proj}}$$

$$y_{clip} = \frac{y_{proj}}{w_{proj}}$$

3. Then the Direct3D run-time transforms the position into viewport space, from the value range [-1.0, 1.0] to the range [0.0, ScreenWidth] for x and [0.0, ScreenHeight] for y.

$$x_{viewport} = x_{clip} \cdot \frac{ScreenWidth}{2} + \frac{ScreenWidth}{2}$$

$$y_{viewport} = -y_{clip} \cdot \frac{ScreenHeight}{2} + \frac{ScreenHeight}{2}$$

This can be simplified to:

$$x_{viewport} = (x_{clip} + 1.0) \cdot \frac{ScreenWidth}{2}$$

$$y_{viewport} = (1.0 - y_{clip}) \cdot \frac{ScreenHeight}{2}$$

The result represents the position on the screen. The y component needs to be inverted because y increases upwards in clip space but downwards in screen coordinates.

4. Because the result should be in texture space and not in screen space, the coordinates need to be transformed from clip space to texture space, in other words from the range [-1.0, 1.0] to the range [0.0, 1.0].

$$u = (x_{clip} + 1.0) \cdot \frac{1}{2}$$

$$v = (1.0 - y_{clip}) \cdot \frac{1}{2}$$

5. Due to the way Direct3D 9 maps texels to pixels, we need to offset the texture coordinates by half a texel:

$$u = (x_{clip} + 1.0) \cdot \frac{1}{2} + \frac{1}{2 \cdot TargetWidth}$$

$$v = (1.0 - y_{clip}) \cdot \frac{1}{2} + \frac{1}{2 \cdot TargetHeight}$$

Plugging in the x and y clip-space coordinates from step 2:

$$u = \left(\frac{x_{proj}}{w_{proj}} + 1.0\right) \cdot \frac{1}{2} + \frac{1}{2 \cdot TargetWidth}$$

$$v = \left(1.0 - \frac{y_{proj}}{w_{proj}}\right) \cdot \frac{1}{2} + \frac{1}{2 \cdot TargetHeight}$$

6. Because this equation should be computed in the vertex shader, its results are sent down through the texture coordinate interpolator registers. Interpolating $1/w_{proj}$ is not the same as taking 1 over the interpolated $w_{proj}$, so the $1/w_{proj}$ term is factored out and applied in the pixel shader:

$$u = \frac{1}{w_{proj}} \left( (x_{proj} + w_{proj}) \cdot \frac{1}{2} + \frac{w_{proj}}{2 \cdot TargetWidth} \right)$$

$$v = \frac{1}{w_{proj}} \left( (w_{proj} - y_{proj}) \cdot \frac{1}{2} + \frac{w_{proj}}{2 \cdot TargetHeight} \right)$$

The vertex shader source code looks like this, where p is the projected position and inScreenDim.xy is assumed to hold (1 / TargetWidth, 1 / TargetHeight):

float4 vPos = float4(0.5 * (float2(p.x + p.w, p.w - p.y) + p.w * inScreenDim.xy), p.zw);

The equation without the half-texel offset starts again at step 4:

$$u = (x_{clip} + 1.0) \cdot \frac{1}{2}$$

$$v = (1.0 - y_{clip}) \cdot \frac{1}{2}$$

Plugging in the x and y clip-space coordinates from step 2:

$$u = \left(\frac{x_{proj}}{w_{proj}} + 1.0\right) \cdot \frac{1}{2}$$

$$v = \left(1.0 - \frac{y_{proj}}{w_{proj}}\right) \cdot \frac{1}{2}$$

Moving $1/w_{proj}$ to the front leads to:

$$u = \frac{1}{w_{proj}} \left( (x_{proj} + w_{proj}) \cdot \frac{1}{2} \right)$$

$$v = \frac{1}{w_{proj}} \left( (w_{proj} - y_{proj}) \cdot \frac{1}{2} \right)$$

Because the pixel shader does the division by $w_{proj}$, this leads to the following vertex shader code:

float4 vPos = float4(0.5 * float2(p.x + p.w, p.w - p.y), p.zw);
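To double-check the algebra, here is a small C sketch (CPU code, not shader code) that mirrors the vertex/pixel shader split and compares it with the direct formulas from steps 2 and 5. It assumes, as above, that the offset input holds the reciprocal target size (1 / TargetWidth, 1 / TargetHeight); passing zeros gives the variant without the half-texel offset. All type and function names are made up for this sketch.

```c
typedef struct { float x, y, z, w; } float4;
typedef struct { float u, v; } float2;

/* Vertex-shader part: outputs (u * w, v * w, z, w) without dividing by w.
   inv_w, inv_h: assumed to be 1 / TargetWidth and 1 / TargetHeight
   (pass 0 for both to drop the half-texel offset). */
static float4 vs_screen_texcoord(float4 p, float inv_w, float inv_h)
{
    float4 o;
    o.x = 0.5f * ((p.x + p.w) + p.w * inv_w);
    o.y = 0.5f * ((p.w - p.y) + p.w * inv_h);
    o.z = p.z;
    o.w = p.w;
    return o;
}

/* Pixel-shader part: the per-pixel divide by the interpolated w. */
static float2 ps_screen_texcoord(float4 t)
{
    float2 uv = { t.x / t.w, t.y / t.w };
    return uv;
}

/* Reference: the mapping written directly in clip-space x / w and y / w. */
static float2 direct_screen_texcoord(float4 p, float inv_w, float inv_h)
{
    float xc = p.x / p.w;
    float yc = p.y / p.w;
    float2 uv = { (xc + 1.0f) * 0.5f + 0.5f * inv_w,
                  (1.0f - yc) * 0.5f + 0.5f * inv_h };
    return uv;
}
```

For a single vertex both paths give the same result; the point of the split is that only the vs_screen_texcoord outputs are linear in clip space, so they are the values that can safely go through the interpolators.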

All this is based on a response by mikaelc in the thread "Lighting in a Deferred Renderer" and a response by Frank Puig Placeres in the thread "Reconstructing Position from Depth Data".

Sunday, September 7, 2008

Gauss Filter Kernel

OpenGL Bloom Tutorial

The interesting part is that he shows a way to generate the offset values, and he also mentions a trick that I have been using for a long time: he reduces the filter kernel size by utilizing the hardware linear filtering. This way he can go down from 5 to 3 taps. I usually use bilinear filtering to go down from 9 to 4 taps or 25 to 16 taps (with non-separable filter kernels) ... you get the idea.

Eric Haines just reminded me that this is also described in ShaderX2 - Tips and Tricks on page 451. You can find the (now free) book at

http://www.gamedev.net/reference/programming/features/shaderx2/Tips_and_Tricks_with_DirectX_9.pdf

BTW: Eric Haines contacted all the authors of this book to get permission to make it "open source". I would like to thank him for this.

Check out his blog at

http://www.realtimerendering.com/blog/

Monday, August 18, 2008

Beyond Programmable Shading

Here is the URL for the Larrabee day:

http://s08.idav.ucdavis.edu/

The talks are quite inspiring. I was hoping to see actual Larrabee hardware in action but they did not have any.

I liked Chas Boyd's DirectX 11 talk because he made it clear that different applications call for different software designs. Having looked into DirectX 11 for a while now, it seems like a great API is coming soon that solves some of the outstanding issues we had with DirectX 9 (DirectX 10 will probably be skipped by many in the industry).

The other thing that impressed me is AMD's CAL. The source code looks very elegant for the amount of performance you can unlock with it. Together with Brook+ it lets you control a huge number of cards. It seems like CUDA will soon be able to handle many GPUs at once more easily, too. PostFX are good candidates for those APIs. CAL and CUDA can live in harmony with DirectX 9/10, and DirectX 11 will even have a compute shader model that is the equivalent of CAL and CUDA. Compute shaders are written in HLSL ... so it is a consistent environment.

Thursday, July 31, 2008

ARM Assembly

Tuesday, July 29, 2008

PostFX - The Nx-Gen Approach

On my main target platforms (360 and PS3) it will be hard to squeeze out more performance. There is probably lots of room in everything related to HDR but overall I wouldn't expect any fundamental changes. The main challenge with the pipeline was not on a technical level, but to explain to the artists how they can use it. Especially the tone mapping functionality was hard to explain and it was also hard to give them a starting point where they can work from.

So I am thinking about making it easier for the artists to use this pipeline. The main idea is to follow the camera paradigm. Most of the effects of the pipeline (HDR, Depth of Field, Motion Blur, color filters) are expected to mimic a real-world camera, so why not make it work like a real-world camera?

The idea is to expose only the functionality a camera usually exposes and to name all the sliders accordingly. Furthermore, there will be different camera models with different basic properties as a starting point for the artists. It should also be possible to switch between those on the fly, so a whole group of properties changes at the flip of a switch. This should make it easier to use cameras for cut scenes etc.

iPhone development - Oolong Engine

With some early help from a friend (thank you Andrew :-)) I wrote the initial version of the Oolong engine and had lots of fun figuring out what is and isn't possible on the platform. Then at some point Steve Jobs surprised us with the announcement that there would be an SDK, and judging from Apple's history I believed they probably would not allow games to be developed for the platform.

So now that we have an official SDK, I am surprised how my small-scale geek project turned out :-) ... suddenly I am the maintainer of a small engine that is used in several productions.

Light Pre-Pass - First Blood :-)

To recap: what ends up in the four channels of the light buffer for a point light is the following:

Diffuse.r * N.L * Att | Diffuse.g * N.L * Att | Diffuse.b * N.L * Att | N.H^n * N.L * Att

Here n represents the shininess value of the light source. My original idea for applying different specular values later, in the forward rendering pass, was to divide by N.L * Att like this:

(N.H^n * N.L * Att) / (N.L * Att)

This way I would have reconstructed the N.H^n term and could easily do something like this:

(N.H^n)^mn

where mn represents the material specular. Unfortunately, this requires storing the N.L * Att term in a separate render target channel. The more obvious way to deal with it is to just do this:

(N.H^n * N.L * Att)^mn

... maybe not quite right, but it looks good enough for what I want to achieve.

Friday, June 13, 2008

Stable Cascaded Shadow Maps

What I also like about it is the ShaderX idea. I wrote an article in ShaderX5 describing a first implementation (... btw. I have re-written it three times since then), and Michal picks up from there and brings it to the next level.

There will now be a ShaderX7 article in which I describe a slight improvement to Michal's approach. Michal picks the right shadow map with a rather cool trick. Mine is a bit different, but it might be more efficient. To pick the right map, I send down the bounding sphere constructed for each cascade's light view frustum and check whether the pixel is inside that sphere. If it is, I pick that shadow map; if it isn't, I go to the next sphere. If the pixel is not inside any sphere, I exit early and return white.

At first sight it does not look like a trick but if you think about the spheres lined up along the view frustum and the way they intersect, it is actually pretty efficient and fast.

On my target platforms, especially on the one that Michal likes a lot, this makes a difference.
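The selection loop is simple enough to sketch in C. This assumes the spheres are passed near-to-far and store their squared radius so no square root is needed; the type and function names are made up for illustration.

```c
/* Bounding sphere of one cascade's light view frustum (center + squared radius). */
typedef struct { float cx, cy, cz, r2; } CascadeSphere;

/* Walk the cascades near to far and return the first one whose sphere
   contains the point. -1 means "outside every cascade": the shader would
   exit early and return white (unshadowed). */
static int pick_cascade(const CascadeSphere *s, int count,
                        float px, float py, float pz)
{
    for (int i = 0; i < count; ++i) {
        float dx = px - s[i].cx;
        float dy = py - s[i].cy;
        float dz = pz - s[i].cz;
        if (dx * dx + dy * dy + dz * dz < s[i].r2)
            return i;
    }
    return -1;
}
```

Because neighbouring spheres overlap along the view frustum, a point in the overlap picks the nearer (higher-resolution) cascade first.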

Thursday, June 12, 2008

Screen-Space Global Illumination

Combined with a Light Pre-Pass renderer, we already have the light buffer with all the N.L * Att values, which can be used as intensity, the end result of the opaque rendering pass, and a normal map. Doing the light bounce along the normal and using the N.L * Att entry in the light buffer as intensity should do the trick. The way the values are fetched would be similar to SSAO.

Wednesday, May 28, 2008

UCSD Talk on Light Pre-Pass Renderer

Pat Wilson from GarageGames is sharing his findings with me. He came up with an interesting way to store LUV colors.

Renaldas Zioma told me that a similar idea was used in Battlezone 2.

This is exciting :-)

The link to the slides is at the end of the March 16th post.

Thursday, May 15, 2008

DX 10 Graphics demo skeleton

http://code.google.com/p/graphicsdemoskeleton/

What is it: just a minimal skeleton to start creating your own small-size apps with DX10. At some point I had a particle system running in 1.5 KB this way (that was with DX9). While thinking about small executables, I found one interesting thing: when I use DX9, compile HLSL shader code into a header file and include it, the result is smaller than the equivalent C code. So what I was thinking was: hey, let's write a math library in HLSL, use the CPU only for the stub code that launches everything, and let it run on the GPU :-)

Tuesday, April 29, 2008

Today is the day: GTA IV is released

Monday, April 21, 2008

RGB -> XYZ conversion

http://www.w3.org/Graphics/Color/sRGB

They use

// 0.4125 0.3576 0.1805

// 0.2126 0.7152 0.0722

// 0.0193 0.1192 0.9505

to convert from RGB to XYZ and

// 3.2410 -1.5374 -0.4986

// -0.9692 1.8760 0.0416

// 0.0556 -0.2040 1.0570

to convert back.

Here is how I do it:

const float3x3 RGB2XYZ = {0.5141364, 0.3238786, 0.16036376,

0.265068, 0.67023428, 0.06409157,

0.0241188, 0.1228178, 0.84442666};

Here is how I convert back:

const float3x3 XYZ2RGB = { 2.5651,-1.1665,-0.3986,

-1.0217, 1.9777, 0.0439,

0.0753, -0.2543, 1.1892};
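A quick way to sanity-check such a matrix pair is a round trip on the CPU. This C sketch uses the two matrices above, assuming they are applied as matrix times column vector (rows give X, Y, Z), which matches the Y row holding the familiar luminance-like weights; Mat3 and mul3 are names made up for the sketch.

```c
/* Row-major 3x3 matrix: out = M * in. */
typedef struct { float m[3][3]; } Mat3;

/* The matrices from the post. */
static const Mat3 RGB2XYZ = {{
    {0.5141364f, 0.3238786f,  0.16036376f},
    {0.265068f,  0.67023428f, 0.06409157f},
    {0.0241188f, 0.1228178f,  0.84442666f}}};

static const Mat3 XYZ2RGB = {{
    { 2.5651f, -1.1665f, -0.3986f},
    {-1.0217f,  1.9777f,  0.0439f},
    { 0.0753f, -0.2543f,  1.1892f}}};

/* out must not alias in. */
static void mul3(const Mat3 *a, const float in[3], float out[3])
{
    for (int r = 0; r < 3; ++r)
        out[r] = a->m[r][0] * in[0] + a->m[r][1] * in[1] + a->m[r][2] * in[2];
}
```

Converting a color to XYZ and back should reproduce it to within about 1e-4, which shows the second matrix is a good inverse of the first. The same check works for the W3C pair.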

You should definitely try out different ways to do this :-)

Monday, April 14, 2008

Ported my iPhone Engine to OS 2.0

http://www.oolongengine.com

My main development device is still an iPod touch because I am worried about not being able to make phone calls anymore.

Tuesday, April 8, 2008

Accepted for the iPhone Developer Program

Tuesday, March 25, 2008

Some Great Links

I did not know that you can set up a virtual Cell chip on your PC. This course looks interesting:

http://www.cc.gatech.edu/~bader/CellProgramming.html

John Ratcliff's Code Suppository is a great place to find fantastic code snippets:

http://www.codesuppository.blogspot.com/

Here is a great paper to help with first steps in multi-core programming:

http://www.digra.org/dl/db/06278.34239.pdf

A general graphics programming course is available here:

http://users.ece.gatech.edu/~lanterma/mpg/

I will give this URL to people who ask me how to learn graphics programming.

Sunday, March 16, 2008

Light Pre-Pass Renderer

The idea is to fill up a Z buffer first and also store normals in a render target. This is like a G-Buffer with normals and Z values ... so compared to a deferred renderer there is no diffuse color, specular color, material index or position data stored in this stage.

Next, the light buffer is filled with light properties. The idea is to distinguish between light and material properties. If you look at a simplified light equation for one point light, it looks like this:

Color = Ambient + Shadow * Att * (N.L * DiffColor * DiffIntensity * LightColor + R.V^n * SpecColor * SpecIntensity * LightColor)

The light properties are:

- N.L

- LightColor

- R.V^n

- Attenuation

So instead of rendering a whole lighting equation for each light into a render target, you render only the light properties into an 8:8:8:8 render target. You have four channels, so you can render:

LightColor.r * N.L * Att

LightColor.g * N.L * Att

LightColor.b * N.L * Att

R.V^n * N.L * Att

That means in this setup there is no dedicated specular color ... which is on purpose (you can extend it easily).
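To make the channel layout concrete, here is a CPU-side C sketch of what each light contributes and how additive blending accumulates all lights into the 8:8:8:8 light buffer. It assumes ONE/ONE additive blending and models only the clamp to [0, 1], not the 8-bit quantization; LightSample and the function names are hypothetical.

```c
/* Hypothetical per-pixel inputs for one light (names are illustrative). */
typedef struct {
    float color[3];  /* LightColor */
    float nl;        /* N.L, clamped to [0, 1] */
    float att;       /* attenuation */
    float rv_n;      /* R.V^n */
} LightSample;

typedef struct { float r, g, b, a; } Rgba;

static float saturate(float v) { return v < 0.0f ? 0.0f : (v > 1.0f ? 1.0f : v); }

/* The four channels one light contributes. */
static Rgba light_buffer_entry(const LightSample *l)
{
    float k = l->nl * l->att;
    Rgba o = { l->color[0] * k, l->color[1] * k, l->color[2] * k, l->rv_n * k };
    return o;
}

/* Additive (ONE/ONE) blending of every light into an 8:8:8:8 target.
   Only the clamp to [0, 1] is modelled, not the 8-bit quantization. */
static Rgba accumulate_lights(const LightSample *lights, int count)
{
    Rgba acc = { 0.0f, 0.0f, 0.0f, 0.0f };
    for (int i = 0; i < count; ++i) {
        Rgba e = light_buffer_entry(&lights[i]);
        acc.r = saturate(acc.r + e.r);
        acc.g = saturate(acc.g + e.g);
        acc.b = saturate(acc.b + e.b);
        acc.a = saturate(acc.a + e.a);
    }
    return acc;
}
```

The clamp is the main limitation of a plain 8:8:8:8 target: once several bright lights overlap, the color channels saturate while the specular channel may still have headroom.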

Here is the source code for what I store in the light buffer:

half4 ps_main( PS_INPUT Input ) : COLOR

{

// Fetch the G-buffer: xy = packed normal, zw = packed depth

half4 GBuffer = tex2D( G_Buffer, Input.texCoord );

// Compute pixel position (view space, eye at the origin)

half Depth = UnpackFloat16( GBuffer.zw );

float3 PixelPos = normalize(Input.EyeScreenRay.xyz) * Depth;

// Compute normal; saturate keeps sqrt away from negative inputs

half3 Normal;

Normal.xy = GBuffer.xy*2-1;

Normal.z = -sqrt(saturate(1-dot(Normal.xy,Normal.xy)));

// Compute light attenuation and direction

float3 LightDir = (Input.LightPos - PixelPos)*InvSqrLightRange;

half Attenuation = saturate(1-dot(LightDir / LightAttenuation_0, LightDir / LightAttenuation_0));

LightDir = normalize(LightDir);

// R.V == Phong; the eye sits at the origin, so the view vector is -PixelPos

float3 ViewDir = normalize(-PixelPos);

float specular = pow(saturate(dot(reflect(-ViewDir, Normal), LightDir)), SpecularPower_0);

// Clamp N.L so back-facing pixels do not subtract light during blending

float NL = saturate(dot(LightDir, Normal))*Attenuation;

return float4(DiffuseLightColor_0.xyz*NL, specular * NL);

}

After all lights are alpha-blended into the light buffer, you switch to forward rendering and reconstruct the lighting equation. In its simplest form this might look like this

float4 ps_main( PS_INPUT Input ) : COLOR0

{

float4 Light = tex2D( Light_Buffer, Input.texCoord );

float3 NLATTColor = float3(Light.x, Light.y, Light.z);

float3 Lighting = NLATTColor + Light.www;

return float4(Lighting, 1.0f);

}

This is a direct competitor to the light indexed renderer idea described by Damian Trebilco in his paper.

I have a small example program that compares this approach to a deferred renderer, but I have not compared it to Damian's approach. I believe his approach might be more flexible than mine regarding a material system, but the Light Pre-Pass renderer does not need to do the indexing. It should even run on a seven-year-old ATI RADEON 8500, because you only have to do a Z pre-pass and store the normals upfront.

The following screenshot shows four point-lights. There is no restriction in the number of light sources:

The following screenshot shows the same scene running with a deferred renderer. There should not be any visual differences from the Light Pre-Pass Renderer:


The design here is very flexible and scalable, so I expect people to start from here and end up with quite different results. One of the challenges with this approach is setting up a good material system. You can store different values in the light buffer or use the values above and construct interesting materials. For example, a per-object specular highlight could be done by taking the value stored in the alpha channel and applying a power function to it, or you could store the power value in a different channel.

Obviously my initial approach is only scratching the surface of the possibilities.

P.S: to implement a material system for this you can do two things: you can handle it like in a deferred renderer by storing a material id with the normal map ... maybe in the alpha channel, or you can reconstruct the diffuse and specular term in the forward rendering pass. The only thing you have to store to do this is N.L * Att in a separate channel. This way you can get back R.V^n by using the specular channel and dividing it by N.L * Att. So what you do is:

(R.V^n * N.L * Att) / (N.L * Att)

Keep in mind that these values are accumulated over all light sources, so the result represents all lights together rather than a single one.

Here is a link to the slides of my UCSD Renderer Design presentation. They provide more detail.

Friday, March 7, 2008

iPhone SDK so far

Now I have to wait until I get access to the iPhone OS 2.0 ... what a pain.

Sunday, March 2, 2008

Predicting the Future in Game Graphics

HDR

Rendering with high dynamic range is realized in two areas: in the renderer and in the source data (i.e. the textures of objects).

On current high-end platforms, people run the lighting in the renderer in gamma 1.0 and use the 10:10:10:2 format wherever available, or an 8:8:8:8 render target with a non-standard color format that supports a larger range of values (> 1) and more deltas. Typically these are the LogLuv or L16uv color formats.

There are big developments for the source art. id Software published an article on an 8-bit-per-pixel color format (stored in a DXT5 texture) that has much better quality than the 4-bit-per-pixel DXT1 format we usually use. Before that there were numerous attempts at using scale and bias values in a hacked DXT header to make better use of the available deltas in the texture, for example for rather dark textures. One of the challenges here was to make all this work with gamma 1.0.

At GDC 2007, during Chas Boyd's DirectX Future talk, I suggested extending DX with an 8-bit HDR format that also supports gamma 1.0 better. It would be great if they came up with a better compression scheme than DXT in the future, but until then we will try to make DXT1 + L16 or DXT5 hack scenarios work :-) or use id Software's solution.

Normal Map Data

Some of the most expensive data is normal map data. So far we are "mis-using" the DXT formats to compress vector data. If you generally store height data this opens up more options. Many future techniques like Parallax mapping or any "normal map blending" require height map data. So this is some area of practical interest :-) ... check out the normal vector talk of the GDC 2008 tutorial day I organized at http://www.coretechniques.info/.

Lighting Models

Everyone is trying to find lighting models that can mimic a wider range of materials. The Strauss lighting model seems to be popular, and some people come up with their own lighting models.

Renderer Design

To render opaque objects, there are currently two renderer designs at the opposite ends of the spectrum: the so-called deferred renderer and the so-called forward renderer. The deferred renderer design came up to allow a higher number of lights. That advantage has to be paid for with lower quality settings in other areas.

So people now start to research new renderer designs that have the advantages of both designs but none of the disadvantages. There is a Light indexed renderer and I am working on a Light pre-pass renderer. New renderer designs will allow more light sources ... but what is a light source without shadow? ...

Shadows

Lots of progress was made with shadows. Cascaded Shadow maps are now the favorite way to split up shadow data along the view frustum. Nevertheless there are more ways to distribute the shadow resolution. This is an interesting area of research.

The other big area is using probability functions to replace the binary depth comparison. Then the next big thing will be soft shadows that become softer when the distance between the occluder and the receiver becomes bigger.
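One well-known example of replacing the binary depth comparison with a probability function is the variance shadow map, which stores depth and depth squared and uses the one-sided Chebyshev inequality as an upper bound on visibility. The C sketch below shows only that lookup; the parameter names and the minimum-variance clamp value are assumptions.

```c
/* Variance shadow map lookup (one known way to replace the binary depth compare).
   mean, mean_sq: filtered moments E[d] and E[d^2] fetched from the shadow map.
   t: receiver depth in light space. Returns an upper bound on visibility in [0, 1]. */
static float vsm_visibility(float mean, float mean_sq, float t, float min_variance)
{
    if (t <= mean)
        return 1.0f;                        /* receiver is not behind the average occluder */
    float variance = mean_sq - mean * mean;
    if (variance < min_variance)
        variance = min_variance;            /* guards against precision problems */
    float d = t - mean;
    return variance / (variance + d * d);   /* one-sided Chebyshev inequality */
}
```

Because the moments can be filtered like any texture, the result fades smoothly instead of jumping between 0 and 1.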

Global Illumination

This is the area with the biggest growth potential currently in games :-) Like screen-space ambient occlusion that is super popular now because of Crysis, screen-space irradiance will offer lots of research opportunities.

To target more advanced hardware, Carsten Dachsbacher's approach in ShaderX5 looks to me like a great starting point.

Wednesday, February 13, 2008

Android

-----------------

Can I write code for Android using C/C++?

No. Android applications are written using the Java programming language.

-----------------

So no easy game ports for this platform. Additionally, the language will eat up so many cycles that good-looking 3D games do not make much sense. No business case for games then ... maybe they will start thinking about it :-) ... using Java also looks quite unprofessional to me, but I heard other phone companies are doing this as well to protect their margins and keep control over the device.

Friday, January 18, 2008

gDEbugger for OpenGL ES

I guess with the decreasing OpenGL market there is not much money in providing a debugger for this API. In games, fewer companies make PC games, and OpenGL is no longer used by any AAA title on the main PC platform, Windows.

I was hoping that they would target the upcoming OpenGL ES market, but this might still be in its infancy. If anyone knows a tool to debug OpenGL ES similar to PIX or GcmReplay, I would appreciate a hint. For debugging I would work on the PC platform ... in other words, I have a PC and an iPhone version of the game :-)

Update January 19th: graphicRemedy actually came back to me and asked me to send in source code ... very nice.

Tuesday, January 8, 2008

San Angeles Observation on the iPhone

http://iki.fi/jetro/

This demo throws about 60k polys onto the iPhone and runs quite slowly :-(. I will double-check with Apple that this is not due to a lame OpenGL ES implementation on the phone.

I am thinking about porting other stuff now over to the phone or working on getting a more mature feature set for the engine ... porting is nearly more fun, because you can show a result afterwards :-) ... let's see.