I just came across some cool links today while looking for material on multi-core programming and on how to generate an indexed triangle list from a triangle soup.

I did not know that you can set up a virtual Cell chip on your PC. This course looks interesting:

http://www.cc.gatech.edu/~bader/CellProgramming.html

John Ratcliff's Code Suppository is a great place to find fantastic code snippets:

http://www.codesuppository.blogspot.com/

Here is a great paper to help with first steps in multi-core programming:

http://www.digra.org/dl/db/06278.34239.pdf

A general graphics programming course is available here:

http://users.ece.gatech.edu/~lanterma/mpg/

I will provide this URL to people who ask me about how to learn graphics programming.

Tuesday, March 25, 2008

Sunday, March 16, 2008

Light Pre-Pass Renderer

In June last year I had an idea for a new rendering design. I call it the light pre-pass renderer.

The idea is to fill up a Z buffer first and also store normals in a render target. This is like a G-Buffer with normals and Z values ... so compared to a deferred renderer there is no diffuse color, specular color, material index or position data stored in this stage.

Next the light buffer is filled up with light properties. So the idea is to separate light properties from material properties. If you look at a simplified lighting equation for one point light, it looks like this:

Color = Ambient + Shadow * Att * (N.L * DiffColor * DiffIntensity * LightColor + R.V^n * SpecColor * SpecIntensity * LightColor)

The light properties are:

- N.L

- LightColor

- R.V^n

- Attenuation

So instead of rendering a whole lighting equation for each light into a render target, you render only the light properties into an 8:8:8:8 render target. You have four channels, so you can render:

LightColor.r * N.L * Att

LightColor.g * N.L * Att

LightColor.b * N.L * Att

R.V^n * N.L * Att

That means in this setup there is no dedicated specular color ... which is on purpose (you can extend it easily).
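As a rough CPU-side illustration of the four channels listed above, here is a minimal Python sketch for a single point light. It is not shader code, and the helper names (`light_buffer_value`, `saturate`, `reflect`) and the parameter layout are assumptions made for this example. Like the pixel shader in this post, it reflects the view direction about the normal and compares the result with the light direction.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def saturate(x):
    # Clamp to [0, 1], like the HLSL intrinsic of the same name.
    return max(0.0, min(1.0, x))

def reflect(i, n):
    # Same convention as the HLSL intrinsic: i - 2 * dot(i, n) * n
    d = dot(i, n)
    return tuple(ic - 2.0 * d * nc for ic, nc in zip(i, n))

def light_buffer_value(normal, to_light, view_dir, light_color, att, spec_power):
    """Return the four light buffer channels for one point light.

    normal, to_light and view_dir are assumed to be unit vectors; view_dir
    points from the camera towards the surface. att is the attenuation
    factor in [0, 1]. All names here are hypothetical.
    """
    n_dot_l = saturate(dot(normal, to_light))
    spec = saturate(dot(reflect(view_dir, normal), to_light)) ** spec_power
    weight = n_dot_l * att
    return (light_color[0] * weight,   # LightColor.r * N.L * Att
            light_color[1] * weight,   # LightColor.g * N.L * Att
            light_color[2] * weight,   # LightColor.b * N.L * Att
            spec * weight)             # R.V^n * N.L * Att

# A light straight above a surface that faces the camera.
texel = light_buffer_value((0.0, 0.0, 1.0), (0.0, 0.0, 1.0), (0.0, 0.0, -1.0),
                           (1.0, 0.5, 0.25), 0.5, 8)
```

A light behind the surface yields zero in all four channels, because both N.L and the specular term are clamped.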

Here is the source code for what I store in the light buffer:

half4 ps_main( PS_INPUT Input ) : COLOR
{
    // Sample the G-Buffer: xy holds the packed normal, zw the packed depth.
    // The local is named GBufferSample so it does not hide the G_Buffer sampler.
    half4 GBufferSample = tex2D( G_Buffer, Input.texCoord );

    // Compute pixel position
    half Depth = UnpackFloat16( GBufferSample.zw );
    float3 PixelPos = normalize(Input.EyeScreenRay.xyz) * Depth;

    // Compute normal (z is reconstructed from xy)
    half3 Normal;
    Normal.xy = GBufferSample.xy*2-1;
    Normal.z = -sqrt(1-dot(Normal.xy,Normal.xy));

    // Compute light attenuation and direction
    float3 LightDir = (Input.LightPos - PixelPos)*InvSqrLightRange;
    half Attenuation = saturate(1-dot(LightDir / LightAttenuation_0, LightDir / LightAttenuation_0));
    LightDir = normalize(LightDir);

    // R.V == Phong
    float specular = pow(saturate(dot(reflect(normalize(-float3(0.0, 1.0, 0.0)), Normal), LightDir)), SpecularPower_0);

    // N.L with the attenuation folded in
    float NL = dot(LightDir, Normal)*Attenuation;

    // rgb = LightColor * N.L * Att, a = R.V^n * N.L * Att
    return float4(DiffuseLightColor_0.x*NL, DiffuseLightColor_0.y*NL, DiffuseLightColor_0.z*NL, specular * NL);
}

After all lights are alpha-blended into the light buffer, you switch to forward rendering and reconstruct the lighting equation. In its simplest form this might look like this:

float4 ps_main( PS_INPUT Input ) : COLOR0
{
    float4 Light = tex2D( Light_Buffer, Input.texCoord );

    float3 NLATTColor = float3(Light.x, Light.y, Light.z);
    float3 Lighting = NLATTColor + Light.www;

    return float4(Lighting, 1.0f);
}
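To make the two stages easier to follow, here is a small Python sketch of the light accumulation and a slightly extended forward pass. It is only a CPU-side illustration: `accumulate_lights`, `shade`, `albedo` and `spec_color` are hypothetical names, and the material colors are an assumed extension of the simplest form shown above. The clamp imitates the [0, 1] range of an 8:8:8:8 target, but the sketch does not quantize to 8 bits.

```python
def accumulate_lights(contributions):
    """Additively blend per-light RGBA values, clamping each channel to
    [0, 1] the way a fixed-point 8:8:8:8 render target would."""
    total = [0.0, 0.0, 0.0, 0.0]
    for contribution in contributions:
        for i in range(4):
            total[i] = min(1.0, total[i] + contribution[i])
    return tuple(total)

def shade(light, albedo, spec_color):
    """Forward pass: combine one light buffer texel with material colors.

    light is (r, g, b, spec); albedo and spec_color are assumed
    per-material RGB values that are not part of the original shader.
    """
    diffuse = tuple(a * l for a, l in zip(albedo, light[:3]))
    specular = tuple(s * light[3] for s in spec_color)
    return tuple(min(1.0, d + s) for d, s in zip(diffuse, specular))

# Two lights hitting the same pixel, then shading with a grey material.
light_texel = accumulate_lights([(0.5, 0.25, 0.125, 0.5),
                                 (0.75, 0.25, 0.0, 0.25)])
color = shade(light_texel, (0.5, 0.5, 0.5), (1.0, 1.0, 1.0))
```

With an albedo of 1 and a white specular color, `shade` reduces to the `NLATTColor + Light.www` form of the shader above.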

This is a direct competitor to the light indexed renderer idea described by Damian Trebilco in his paper.

I have a small example program that compares this approach to a deferred renderer, but I have not compared it to Damian's approach. I believe his approach might be more flexible regarding a material system than mine, but the Light Pre-Pass renderer does not need to do the indexing. It should even run on a seven-year-old ATI RADEON 8500 because you only have to do a Z pre-pass and store the normals up front.

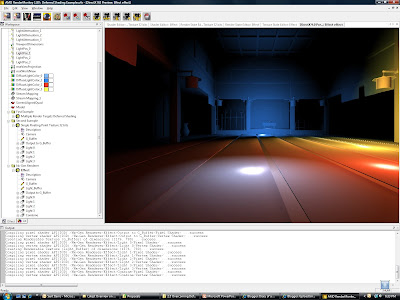

The following screenshot shows four point-lights. There is no restriction in the number of light sources:

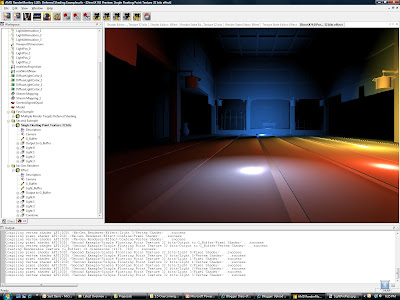

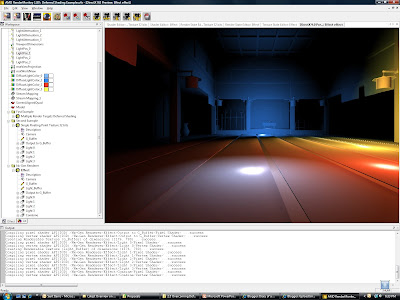

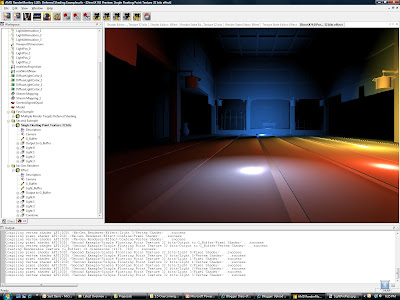

The following screenshots show the same scene running with a deferred renderer. There should not be any visual differences to the Light Pre-Pass Renderer:

The design here is very flexible and scalable, so I expect people to start from here and end up with quite different results. One of the challenges with this approach is to set up a good material system. You can store different values in the light buffer or use the values above and construct interesting materials. For example, a per-object specular highlight could be done by taking the value stored in the alpha channel and applying a power function to it, or by storing the power value in a different channel.

Obviously my initial approach only scratches the surface of the possibilities.

P.S.: To implement a material system for this you can do two things: you can handle it like in a deferred renderer by storing a material id with the normal map ... maybe in the alpha channel, or you can reconstruct the diffuse and specular terms in the forward rendering pass. The only thing you have to store to do this is N.L * Att in a separate channel. This way you can get back R.V^n by taking the specular channel and dividing it by N.L * Att. So what you do is:

(R.V^n * N.L * Att) / (N.L * Att)

Those are actually values that represent all light sources.
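Here is a minimal Python sketch of that division, assuming the light buffer holds the sum of R.V^n * N.L * Att in one channel and the sum of N.L * Att in another. The function name and the `eps` guard against division by zero are assumptions for this example. Because both channels are sums over all lights, the result is a weighted average of the per-light R.V^n terms rather than the value of any single light.

```python
def recover_specular(spec_times_nl_att, nl_att, eps=1e-5):
    """Recover R.V^n from the accumulated specular channel.

    spec_times_nl_att: accumulated R.V^n * N.L * Att over all lights
    nl_att:            accumulated N.L * Att over all lights
    eps is a hypothetical guard for unlit pixels (nl_att close to zero).
    """
    if nl_att < eps:
        return 0.0
    return spec_times_nl_att / nl_att

# Two lights as (R.V^n, N.L * Att) pairs.
lights = [(0.8, 0.5), (0.2, 0.25)]
spec_channel = sum(spec * w for spec, w in lights)
nl_att_channel = sum(w for _, w in lights)
recovered = recover_specular(spec_channel, nl_att_channel)
```

In this example the recovered value lies between the two per-light specular terms, weighted towards the light with the larger N.L * Att.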

Here is a link to the slides of my UCSD Renderer Design presentation. They provide more detail.

Friday, March 7, 2008

iPhone SDK so far

Just set up a dev environment this morning with the iPhone SDK ... overall it is quite disappointing for games :-). OpenGL ES is not supported in the emulator, but you can't run apps on the iPhone without OS 2.0 ... and this has not been released so far. In other words, they have OpenGL ES examples but you can't run them anywhere. I hope I get access to the 2.0 file system somehow. Other than this, I now have the old and the new SDK set up on one machine and it works nicely.

Now I have to wait until I get access to the iPhone OS 2.0 ... what a pain.

Sunday, March 2, 2008

Predicting the Future in Game Graphics

So I was thinking about the next 1 - 2 years of graphics programming in the game industry on the XBOX 360 and the PS3. I think we can see a few very strong trends that will persist over the next few years.

HDR

Rendering with high dynamic range is realized in two areas: in the renderer and in the source data, i.e. the textures of objects.

On current high-end platforms people run the lighting in the renderer in gamma 1.0, and they use the 10:10:10:2 format wherever available or an 8:8:8:8 render target with a non-standard color format that supports a larger range of values (> 1) and more deltas. Typically these are the LogLuv or L16uv color formats.

There are big developments for the source art. id Software published an article on an 8-bit per pixel color format, stored in a DXT5 format, that has much better quality than the 4-bit per pixel DXT1 format that we usually use. Before that there were numerous attempts to use scale and bias values in a hacked DXT header to make better use of the available deltas in the texture for, e.g., rather dark textures. One of the challenges here was to make all this work with gamma 1.0.
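The scale and bias idea can be illustrated with a few lines of Python. This is only a sketch under simplifying assumptions: a plain 8-bit quantizer stands in for the DXT compressor, a single channel is used, and the function names are made up for this example. The point is that a dark texture stretched to the full [0, 1] range before quantization loses less precision than one quantized directly.

```python
def compute_scale_bias(texels):
    """Pick scale and bias so that the texel range maps onto [0, 1]."""
    lo, hi = min(texels), max(texels)
    scale = hi - lo
    if scale == 0.0:
        scale = 1.0  # constant texture: avoid dividing by zero
    return scale, lo

def encode(texels, scale, bias):
    # Stretch to [0, 1], then quantize to 8 bits.
    return [round((t - bias) / scale * 255) for t in texels]

def decode(codes, scale, bias):
    # What a shader would do after the fetch: value * scale + bias.
    return [c / 255 * scale + bias for c in codes]

dark = [0.02, 0.05, 0.1]  # a rather dark texture
scale, bias = compute_scale_bias(dark)
stretched = decode(encode(dark, scale, bias), scale, bias)
direct = decode(encode(dark, 1.0, 0.0), 1.0, 0.0)

err_stretched = max(abs(a - b) for a, b in zip(dark, stretched))
err_direct = max(abs(a - b) for a, b in zip(dark, direct))
```

For this input the stretched version has a clearly smaller worst-case error than the direct one, at the cost of two extra constants per texture.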

At GDC 2007, during Chas. Boyd's DirectX Future talk, I suggested extending DX to support an 8-bit HDR format that also supports gamma 1.0 better. It would be great if they could come up with a better compression scheme than DXT in the future, but until then we will try to make combinations of DXT1 + L16 or DXT5 hacks work :-) or utilize id Software's solution.

Normal Map Data

Some of the most expensive data is normal map data. So far we are "mis-using" the DXT formats to compress vector data. If you generally store height data, this opens up more options. Many future techniques like parallax mapping or any "normal map blending" require height map data. So this is an area of practical interest :-) ... check out the normal vector talk of the GDC 2008 tutorial day I organized at http://www.coretechniques.info/.
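One of the options that height data opens up is deriving the normal when it is needed. Here is a minimal Python sketch using central differences; the function name, the `strength` parameter and the clamped border handling are assumptions for this example, not something taken from the talk mentioned above.

```python
import math

def normal_from_height(height, x, y, strength=1.0):
    """Derive a unit normal at texel (x, y) from a 2D height field.

    height is a list of rows; lookups outside the map are clamped to the
    border. strength is a hypothetical bump scale factor.
    """
    rows, cols = len(height), len(height[0])

    def h(cx, cy):
        cx = max(0, min(cols - 1, cx))
        cy = max(0, min(rows - 1, cy))
        return height[cy][cx]

    # Central differences approximate the slope in x and y.
    dx = (h(x + 1, y) - h(x - 1, y)) * 0.5 * strength
    dy = (h(x, y + 1) - h(x, y - 1)) * 0.5 * strength

    n = (-dx, -dy, 1.0)
    length = math.sqrt(n[0] * n[0] + n[1] * n[1] + n[2] * n[2])
    return tuple(c / length for c in n)

# A ramp that rises along x tilts the normal towards negative x.
ramp = [[0.0, 1.0, 2.0],
        [0.0, 1.0, 2.0],
        [0.0, 1.0, 2.0]]
n = normal_from_height(ramp, 1, 1)
```

A flat height field produces the straight-up normal (0, 0, 1).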

Lighting Models

Everyone is trying to find lighting models that can mimic a wider range of materials. The Strauss lighting model seems to be popular, and some people come up with their own lighting models.

Renderer Design

To render opaque objects there are currently two renderer designs at the two ends of the spectrum: the so-called deferred renderer and the so-called forward renderer. The idea of the deferred renderer design came up to allow a higher number of lights. The advantage of a higher number of lights has to be paid for with lower quality settings in other areas.

So people are now starting to research new renderer designs that have the advantages of both designs but none of the disadvantages. There is a Light indexed renderer and I am working on a Light pre-pass renderer. New renderer designs will allow more light sources ... but what is a light source without shadow? ...

Shadows

Lots of progress was made with shadows. Cascaded Shadow maps are now the favorite way to split up shadow data along the view frustum. Nevertheless there are more ways to distribute the shadow resolution. This is an interesting area of research.
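One common way to choose where the cascades split the view frustum is to blend a logarithmic and a uniform distribution. The Python sketch below shows that scheme as an example. It is an assumption that this is the kind of distribution meant here, and the function and parameter names are made up for illustration.

```python
def cascade_splits(near, far, count, blend=0.5):
    """Return the far distance of each cascade.

    blend = 1.0 gives purely logarithmic splits, blend = 0.0 purely
    uniform ones; values in between mix the two distributions.
    """
    splits = []
    for i in range(1, count + 1):
        t = i / count
        log_split = near * (far / near) ** t
        uniform_split = near + (far - near) * t
        splits.append(blend * log_split + (1.0 - blend) * uniform_split)
    return splits

# Four cascades between a near plane at 1 and a far plane at 100.
splits = cascade_splits(1.0, 100.0, 4)
```

A higher `blend` value concentrates shadow resolution close to the camera, while a lower one spreads it more evenly over the view distance.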

The other big area is using probability functions to replace the binary depth comparison. The next big thing will then be soft shadows that become softer as the distance between the occluder and the receiver grows.
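A well-known instance of such a probability function is the Chebyshev upper bound used by variance shadow maps. The Python sketch below assumes that technique as the example. The shadow map is taken to store the mean depth and the mean squared depth per texel, and the names and the `min_variance` clamp are assumptions for illustration.

```python
def chebyshev_upper_bound(mean, mean_sq, receiver_depth, min_variance=1e-6):
    """Upper bound on the fraction of the filtered texel that is lit.

    mean and mean_sq are the filtered depth and squared depth from the
    shadow map. min_variance is a hypothetical clamp against numerical
    problems when the variance is close to zero.
    """
    if receiver_depth <= mean:
        return 1.0  # receiver is in front of the average occluder
    variance = max(mean_sq - mean * mean, min_variance)
    d = receiver_depth - mean
    return variance / (variance + d * d)

# Occluder depth averages 0.5 with a variance of 0.01; receiver at 0.6.
lit = chebyshev_upper_bound(0.5, 0.26, 0.6)
```

Unlike the binary comparison, the result falls off smoothly as the receiver moves further behind the mean occluder depth.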

Global Illumination

This is the area with the biggest growth potential currently in games :-) Like screen-space ambient occlusion, which is super popular now because of Crysis, screen-space irradiance will offer lots of research opportunities.

To target more advanced hardware, Carsten Dachsbacher's approach in ShaderX5 looks to me like a great starting point.
