The idea is to fill a Z buffer first and also store normals in a render target. This is like a G-Buffer with only normals and Z values ... so, compared to a deferred renderer, no diffuse color, specular color, material index or position data is stored in this stage.
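To illustrate such a thin G-buffer, the sketch below packs view-space normal x/y and a 16-bit depth into one 8:8:8:8 texel. It decodes them the same way the light-buffer shader below does: normal x/y remapped from [0,1], z reconstructed as a negative square root, and depth via `UnpackFloat16`. The post does not show `UnpackFloat16`, so the high-byte/low-byte depth layout is an assumption. All names here (`GBufferTexel`, `packGBuffer`, `unpackNormal`, `unpackDepth`) are hypothetical. This is a CPU-side C++ reference, not shader code.

```cpp
#include <cmath>
#include <cstdint>

// Hypothetical 8:8:8:8 G-buffer texel: normal.xy in r/g, 16-bit depth split over b/a.
struct GBufferTexel { std::uint8_t r, g, b, a; };

// Clamp to [0,1] and quantize to 8 bits, like a UNORM render target write.
inline std::uint8_t toUnorm8(float v) {
    v = std::fmin(std::fmax(v, 0.0f), 1.0f);
    return static_cast<std::uint8_t>(std::lround(v * 255.0f));
}

// Z-fill pass output: view-space normal xy remapped to [0,1], depth normalized to [0,1].
inline GBufferTexel packGBuffer(float nx, float ny, float depth01) {
    GBufferTexel t;
    t.r = toUnorm8(nx * 0.5f + 0.5f);
    t.g = toUnorm8(ny * 0.5f + 0.5f);
    float d = std::fmin(std::fmax(depth01, 0.0f), 1.0f);
    std::uint32_t d16 = static_cast<std::uint32_t>(std::lround(d * 65535.0f));
    t.b = static_cast<std::uint8_t>(d16 >> 8);     // high byte
    t.a = static_cast<std::uint8_t>(d16 & 0xFFu);  // low byte
    return t;
}

// Normal.xy = xy * 2 - 1; Normal.z = -sqrt(1 - dot(xy, xy)), as in the light shader.
inline void unpackNormal(const GBufferTexel& t, float& nx, float& ny, float& nz) {
    nx = t.r / 255.0f * 2.0f - 1.0f;
    ny = t.g / 255.0f * 2.0f - 1.0f;
    // Clamp: 8-bit quantization can push x^2 + y^2 slightly above 1.
    nz = -std::sqrt(std::fmax(1.0f - (nx * nx + ny * ny), 0.0f));
}

// Counterpart of UnpackFloat16(zw) for this assumed layout.
inline float unpackDepth(const GBufferTexel& t) {
    return static_cast<float>((t.b << 8) | t.a) / 65535.0f;
}
```

Only x and y of the normal survive the round trip. The sign of z is implied, which is exactly what the later comments about a definite Z sign are about.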

Next, the light buffer is filled with light properties. The idea is to separate light properties from material properties. A simplified lighting equation for one point light looks like this:

Color = Ambient + Shadow * Att * (N.L * DiffColor * DiffIntensity * LightColor + R.V^n * SpecColor * SpecIntensity * LightColor)

The light properties are:

- N.L

- LightColor

- R.V^n

- Attenuation

So instead of rendering the whole lighting equation for each light into a render target, you render only the light properties into an 8:8:8:8 render target. With four channels you can store:

- LightColor.r * N.L * Att

- LightColor.g * N.L * Att

- LightColor.b * N.L * Att

- R.V^n * N.L * Att

That means in this setup there is no dedicated specular color ... which is on purpose (you can extend it easily).
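A CPU-side C++ sketch of the four channel values for one light might look like this. It also shows what additive blending into an 8:8:8:8 (UNORM) target does with those values. The function names are hypothetical. Quantization to 1/255 steps is ignored, and only the clamp at 1.0 is modeled.

```cpp
#include <algorithm>
#include <array>

// One light's four light-buffer channels:
//   rgb = LightColor * N.L * Att,  a = R.V^n * N.L * Att
inline std::array<float, 4> lightChannels(const std::array<float, 3>& lightColor,
                                          float nDotL, float att, float rDotVn) {
    float nlAtt = std::max(nDotL, 0.0f) * att;  // no negative light from back-facing pixels
    return {lightColor[0] * nlAtt, lightColor[1] * nlAtt, lightColor[2] * nlAtt, rDotVn * nlAtt};
}

// Additive blend into a UNORM target: each channel clamps at 1.0, which is where an
// 8:8:8:8 light buffer saturates when many bright lights overlap.
inline void accumulate(std::array<float, 4>& dst, const std::array<float, 4>& src) {
    for (int i = 0; i < 4; ++i) dst[i] = std::min(dst[i] + src[i], 1.0f);
}
```

Every light in the scene goes through `accumulate` into the same four channels. This is why the buffer size does not depend on the number of lights.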

Here is the source code for what I store in the light buffer:

```hlsl
half4 ps_main( PS_INPUT Input ) : COLOR
{
   half4 GBuffer = tex2D( G_Buffer, Input.texCoord );

   // Compute pixel position (view space, eye at the origin)
   half Depth = UnpackFloat16( GBuffer.zw );
   float3 PixelPos = normalize(Input.EyeScreenRay.xyz) * Depth;

   // Compute normal: x/y are stored, z is reconstructed facing the viewer
   half3 Normal;
   Normal.xy = GBuffer.xy * 2 - 1;
   Normal.z = -sqrt(saturate(1 - dot(Normal.xy, Normal.xy)));

   // Compute light attenuation and direction
   float3 LightDir = (Input.LightPos - PixelPos) * InvSqrLightRange;
   half Attenuation = saturate(1 - dot(LightDir / LightAttenuation_0, LightDir / LightAttenuation_0));
   LightDir = normalize(LightDir);

   // R.V == Phong: reflect the eye-to-pixel ray about N and compare it with L
   float specular = pow(saturate(dot(reflect(normalize(PixelPos), Normal), LightDir)), SpecularPower_0);

   // Clamp N.L so back-facing pixels do not subtract light when blended
   float NL = saturate(dot(LightDir, Normal)) * Attenuation;

   return float4(DiffuseLightColor_0.xyz * NL, specular * NL);
}
```
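To check the per-pixel light-buffer math outside of RenderMonkey, here is a C++ reference sketch. It works under these assumptions:

- View space, with the eye at the origin.
- The view vector taken from the eye-to-pixel ray.
- `InvSqrLightRange` and `LightAttenuation_0` folded into one hypothetical `invRange` scale.
- The same `reflect()` definition HLSL uses (i - 2 * dot(n, i) * n).

```cpp
#include <algorithm>
#include <array>
#include <cmath>

struct Vec3 { float x, y, z; };

inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator*(Vec3 v, float s) { return {v.x * s, v.y * s, v.z * s}; }
inline float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline Vec3 normalize(Vec3 v) { return v * (1.0f / std::sqrt(dot(v, v))); }
inline float saturate(float v) { return std::min(std::max(v, 0.0f), 1.0f); }
// Same definition as HLSL reflect(): i - 2 * dot(n, i) * n
inline Vec3 reflect(Vec3 i, Vec3 n) { return i - n * (2.0f * dot(n, i)); }

// One point light's light-buffer texel: {rgb = LightColor * N.L * Att, a = R.V^n * N.L * Att}.
// invRange is a hypothetical stand-in for InvSqrLightRange / LightAttenuation_0.
inline std::array<float, 4> lightBufferTexel(Vec3 pixelPos, Vec3 normal, Vec3 lightPos,
                                             Vec3 lightColor, float invRange, float specPower) {
    Vec3 toLight = (lightPos - pixelPos) * invRange;
    float att = saturate(1.0f - dot(toLight, toLight));
    Vec3 l = normalize(toLight);
    Vec3 eyeRay = normalize(pixelPos);  // eye at the origin
    float spec = std::pow(saturate(dot(reflect(eyeRay, normal), l)), specPower);
    float nl = saturate(dot(l, normal)) * att;
    return {lightColor.x * nl, lightColor.y * nl, lightColor.z * nl, spec * nl};
}
```

A light placed behind the surface yields all zeros. Without clamping N.L, it would instead write negative values that subtract light from the buffer.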

After all lights have been additively blended into the light buffer, you switch to forward rendering and reconstruct the lighting equation. In its simplest form this might look like this:

```hlsl
float4 ps_main( PS_INPUT Input ) : COLOR0
{
   float4 Light = tex2D( Light_Buffer, Input.texCoord );

   // rgb: sum of LightColor * N.L * Att, w: sum of R.V^n * N.L * Att
   float3 NLATTColor = float3(Light.x, Light.y, Light.z);
   float3 Lighting = NLATTColor + Light.www;

   return float4(Lighting, 1.0f);
}
```
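The simplest form above adds the light buffer channels without any material terms. The sketch below shows where a material could enter, mirroring the structure of the equation at the top (Ambient + diffuse term + specular term). `Material`, `albedo` and `specularColor` are hypothetical main-pass inputs (e.g. from textures). The light buffer holds no specular light color, so in this sketch specular is tinted by the material only.

```cpp
#include <array>

// Hypothetical per-pixel material inputs of the main (forward) pass.
struct Material {
    std::array<float, 3> albedo;         // diffuse color, e.g. from a texture
    std::array<float, 3> specularColor;  // per-material specular tint
};

// lightBuffer.rgb = sum(LightColor * N.L * Att), lightBuffer.a = sum(R.V^n * N.L * Att)
inline std::array<float, 3> shadePixel(const std::array<float, 4>& lightBuffer,
                                       const Material& m,
                                       const std::array<float, 3>& ambient) {
    std::array<float, 3> color{};
    for (int i = 0; i < 3; ++i)
        color[i] = ambient[i] + m.albedo[i] * lightBuffer[i] + m.specularColor[i] * lightBuffer[3];
    return color;
}
```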

This is a direct competitor to the Light Indexed Deferred Rendering idea described by Damian Trebilco in his paper.

I have a small example program that compares this approach to a deferred renderer, but I have not compared it to Damian's approach. I believe his approach might be more flexible than mine regarding a material system, but the Light Pre-Pass renderer does not need to do the indexing. It should even run on a seven-year-old ATI RADEON 8500, because you only have to do a Z pre-pass and store the normals upfront.

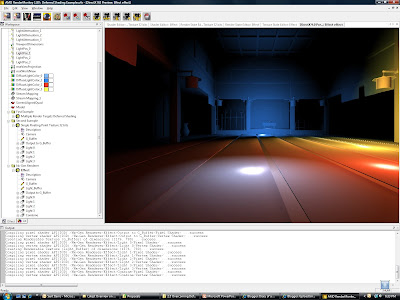

The following screenshot shows four point lights. There is no restriction on the number of light sources:

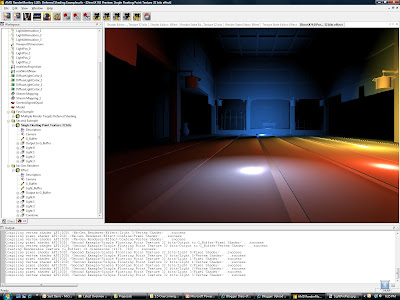

The following screenshot shows the same scene running with a deferred renderer. There should not be any visual differences from the Light Pre-Pass Renderer:


The design here is very flexible and scalable, so I expect people to start from here and end up with quite different results. One of the challenges with this approach is to set up a good material system. You can store different values in the light buffer, or use the values above and construct interesting materials. For example, a per-object specular highlight could be done by taking the value stored in the alpha channel and applying a power function to it, or by storing the power value in a different channel.

Obviously my initial approach only scratches the surface of the possibilities.

P.S.: To implement a material system for this you can do one of two things. You can handle it like a deferred renderer by storing a material ID with the normal map (maybe in the alpha channel). Or you can reconstruct the diffuse and specular terms in the forward rendering pass. For the latter, the only thing you have to store is N.L * Att in a separate channel. You can then get back R.V^n by dividing the specular channel by N.L * Att:

(R.V^n * N.L * Att) / (N.L * Att)

Note that these values are accumulated over all light sources.
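The reconstruction in the P.S. can be written as a small C++ sketch. `LightTerms`, `accumulateChannels` and `reconstructRVn` are hypothetical names. The epsilon guard for unlit pixels (where N.L * Att sums to zero) is an added assumption. For a single light, the division returns R.V^n exactly.

```cpp
#include <vector>

// Per-light terms (hypothetical names): rDotVn = R.V^n, nlAtt = N.L * Att.
struct LightTerms { float rDotVn; float nlAtt; };

// The two channels after additive blending of all lights:
//   specSum = sum(R.V^n * N.L * Att), nlaSum = sum(N.L * Att)
inline void accumulateChannels(const std::vector<LightTerms>& lights,
                               float& specSum, float& nlaSum) {
    specSum = 0.0f;
    nlaSum = 0.0f;
    for (const LightTerms& l : lights) {
        specSum += l.rDotVn * l.nlAtt;
        nlaSum += l.nlAtt;
    }
}

// Forward pass: (R.V^n * N.L * Att) / (N.L * Att), guarded against unlit pixels.
inline float reconstructRVn(float specSum, float nlaSum, float epsilon = 1e-4f) {
    return nlaSum > epsilon ? specSum / nlaSum : 0.0f;
}
```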

Here is a link to the slides of my UCSD Renderer Design presentation. They provide more detail.

Your screenshots/equations are missing an albedo term; however, it's essential. Introducing it into the equation means either extending the "G-buffer" or rendering into the light buffer with a separate geometry pass, which kinda defeats the purpose (?). The same goes for, e.g., a specular texture.

Also, I don't see how you can easily add a separate specular COLOR: you will either require MRT or decrease the quality of lighting (of course, if you have access to albedo in the light shader, you can do it by outputting the final color in the light pass). Adding intensity is trivial though, again unless it's texture-controlled.

Arseny Kapoulkine: I don't think you understand what he is doing. Rendering consists of 3 passes:

1) Render depth + normals

2) Render lights into light texture

3) Render main geometry (with full texturing etc.) accessing the light texture.

So yes, there are two passes over the scene geometry.

When "Light Pre-Pass Renderer" was mentioned, this is kinda what I thought it would turn out to be. I almost went down this route (see top of page 4) but doing a normal pre-pass was not possible with what I was using it for.

Good work - I like it a lot. I may even see if I can update my demo to compare performance. (I suspect your method will be faster and have fewer artifacts, as LIDR suffers when too many lights overlap.)

Hey Damian,

thanks a lot! It would be awesome to see it in your demo.

- Wolfgang

Hi,

This is quite interesting; I'll try it when I have my new computer. Just one thing, there is a typo here: float3 Lighting = NLATTColor + Light.www;

I think it's Light.w instead of Light.www :)

In effect, it seems more scalable, as I only save depth + normals into two textures (one texture for normals, and one renderbuffer, as I don't know if I can put the depth into the first texture). That's a lot less than normal deferred rendering, and if I understand correctly, I then apply normal forward rendering...

Unfortunately, I think it can't be used in my case, but maybe I'm wrong and you can help me :).

I'm using a simple instant radiosity method. Using deferred rendering, the algorithm is:

1) Render the scene from the camera (G-Buffer: positions, normals, colors)

2) Render the scene from the spot light (save into textures: positions, normals).

So the light textures contain all the points visible from the spot light's point of view.

Then, on the CPU, I place all my secondary lights (the colors stored in the G-Buffer are the colors of the lights).

I do a first pass in which I add the contributions of all the secondary lights, so I need a G-Buffer storing color properties (because a point light's color = a material color).

I don't know if I'm being clear. I'll think about how I can adapt your method to my algorithm, because it really sounds interesting, both for memory savings and for scalability.

NB: one last thing, the Pre-Pass screenshot looks a little blurrier than the normal deferred rendering one; is that normal?

If there is a second geometry pass involved, it's pretty obvious of course.

I like that one.

Damian, there should be no artifacts when a lot of lights overlap - the math is correct (i.e. exactly matches forward rendering solutions, given adequate render target precision).

A couple more notes.

First, it'll start to somewhat fall apart (converge to DS) in more complex cases - like, if you need to control specular power per material (or even per pixel?).

Second, this is not really specific to this method, but I figured this place is as good as any other to ask.

Why does your normal have a definite Z component sign? I mean, of course for a view space *face* normal of a non-culled triangle the Z component sign is definite, but we're dealing with *vertex* normals - things like spheres with totally smooth normals will possibly have artifacts on pixels near silhouettes. Or is this completely wrong and I'm missing something trivial?

Arseny Kapoulkine: I meant my method - LIDR (Light Indexed Deferred Rendering) - suffers from excessive light overlap issues.

Your latest addition of a material system using:

(R.V^n * N.L * Att) / (N.L * Att)

makes no sense to me.

I mean if you have more than one light, that will not work?

(Actually I am not sure why you are multiplying R.V^n by N.L in the first place.)

I store for all lights

R.V^n * N.L * Att

and I store for all lights

N.L * Att in a separate channel.

After all lights are rendered into the light buffer, I can re-construct R.V^n for all lights per-pixel.

The reason why you store R.V^n * N.L * Att in the light buffer is because N.L and Att define the locality of the light.

Do you want me to send you my small example program? Just send me the e-mail address I should send it to. It is a simple RenderMonkey example.

Sure, my email is at the top of my paper you linked to.

I still don't understand how that could work - but I will see what your code is doing.

E.g.:

a = R.V^n

b = N.L * Att

Two lights:

Channel1 = (a1 * b1) + (a2 * b2)

Channel2 = (b1 + b2)

Want a1 + a2

but ((a1 * b1) + (a2 * b2)) / (b1 + b2) is not a1 + a2.

But I think I am missing something....

Also, I could understand R.V^n * Att, but my question is: why is the N.L in there? Won't that soften the specular a lot?
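Plugging numbers into the two-light case above makes the point concrete. The division returns the b-weighted average of a1 and a2, so it lies between them rather than being their sum. The C++ check below uses the notation from the comment (a = R.V^n, b = N.L * Att). The struct and function names are hypothetical.

```cpp
// a = R.V^n, b = N.L * Att for one light (notation from the comment above).
struct Terms { float a; float b; };

// What the two light-buffer channels hold after both lights are blended in.
inline float channel1(Terms l1, Terms l2) { return l1.a * l1.b + l2.a * l2.b; }
inline float channel2(Terms l1, Terms l2) { return l1.b + l2.b; }

// The forward-pass division: a weighted average of a1 and a2 with weights b1, b2.
inline float reconstructed(Terms l1, Terms l2) { return channel1(l1, l2) / channel2(l1, l2); }
```

With a1 = 0.8, b1 = 1.0, a2 = 0.2 and b2 = 0.5, the result is 0.9 / 1.5 = 0.6, while a1 + a2 = 1.0.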

Hi Damian,

N.L is a self-shadowing term in the specular component. You need this to avoid errors when light goes through geometry :-)

Wolfgang,

I like this technique compared with other methods of deferred shading.

I put a (hacky) implementation into a game we recently launched to test the technique, and see how easily it could be integrated into an existing game. If you are interested, I uploaded some shots to Flickr:

http://www.flickr.com/photos/killerbunny/sets/72157604831928541/

Right now I am using an R16G16B16A16F to store the GBuffer and I would like to crunch that down to an R8G8B8A8, at some point.

Someone mentioned the assumption of a positive Z value:

A positive Z value can be assumed in tangent space. I am writing out the World-Space normals to the G-buffer so a negative Z value is possible. What I did to get around this was to multiply the depth value by the sign of the z component of the world-space normal during the Z-fill (Gbuffer) pass. Since eye-space Z is always positive, I strip the sign from the depth, and multiply the reconstructed Z component of the normal by the sign of the depth sample. I don't think this is an ideal solution, but it's what I'm doing currently.
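The sign trick Pat describes can be sketched as follows. The sketch assumes a signed floating-point depth channel (as in his R16G16B16A16F G-buffer) and strictly positive eye-space depth. The function names are hypothetical, and a normal with z exactly 0 is treated as positive.

```cpp
#include <cmath>

// Z-fill pass: store eye-space depth (always > 0) carrying the sign of the normal's z.
inline float encodeDepthWithNormalSign(float eyeDepth, float normalZ) {
    return normalZ < 0.0f ? -eyeDepth : eyeDepth;
}

// Reconstruction: the magnitude is the depth ...
inline float decodeDepth(float stored) { return std::fabs(stored); }

// ... and the sign selects the hemisphere of z = +/-sqrt(1 - x^2 - y^2).
inline float decodeNormalZ(float stored, float nx, float ny) {
    float z = std::sqrt(std::fmax(1.0f - nx * nx - ny * ny, 0.0f));
    return stored < 0.0f ? -z : z;
}
```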

Hey Pat, this is awesome :-) ... cool job. If you are interested, you might send me your real name via e-mail and we can exchange some of the experiences we have had with the approach.

Battlefield 2 used such an approach to gather lighting for terrain, and we later extended it to static geometry for BF2: Special Forces and combined it with adaptive shadow buffers (to reduce the number of passes).

I wonder whether it is possible to combine this method with MSAA? I mean, doing the "forward pass" with MSAA, while depth, normal and light accumulation are done without it, maybe blurring the light buffer along edges. At first this looked like it should work, but on second thought it became apparent that artifacts along edges might appear. And using alpha-to-coverage can make the situation even worse. Any ideas about possible workarounds?

P.S. I'll try it out anyway; the idea of a deferred renderer capable of MSAA (and not requiring DX10.1) is very tempting :)

It works with MSAA ... definitely :-)

Would MSAA in this technique be possible with DirectX9 and (if you know) the XNA framework?

Yes. MSAA is implemented in the same way as for a Z Pre-Pass renderer. If you need a more detailed explanation, I can send you the latest version of the ShaderX7 article I am currently writing.

Pat Wilson from GarageGames implemented this in their engine. I don't know if this was the XNA-based engine ... but he might be able to provide even more implementation details than I can.

He is currently on vacation, but I can forward you his e-mail address.

One way to implement this is to generate a dedicated depth buffer (assuming there is no depth buffer access in XNA) and a normal buffer at standard resolution, and then render the light properties into a light buffer at standard resolution. The chain of draw calls for opaque objects would be:

- Z Pre-Pass with Depth buffer in MSAA mode

- fill up special depth buffer and normal buffer (this might be one render target)

- fill up light buffer

- draw scene with MSAA'ed Depth buffer

I'd very much appreciate previewing the article. You can send it to kek_miyu at yahoo dot se (Sweden, not .com).

I guess part of my MSAA question comes from the fact that in deferred rendering you would get edge artifacts if using a resolved MSAA g-buffer (and you can't use MRTs with MSAA either, I think). I guess I need to do a bit more research, but is it possible to use a non-resolved MSAA g-buffer as shader input, or am I missing something? Can the g-buffer (light buffer) be non-MSAA even though the forward renderer is rendering to an MSAA accumulation buffer (RT), and still avoid artifacts?

I was just in the starting blocks for a deferred renderer and I thought this technique seemed interesting as well (considering bandwidth and MSAA). Although, it seems that summing the specular half-vector factors won't give the same results for overlapping lights (as the specular power will be applied to the sum of half vector contributions and not the components of the sum), unless the specular power is available in a g-buffer when generating the light buffer. However, I imagine one could probably live with this overly specular behaviour for overlapping lights.

Anyways, great stuff and I look forward to reviewing the article!

We posted at the same time :)

Looking at your suggestion still makes me wonder. If the light buffer is not the same resolution as the accumulation buffer (i.e. MSAA increases the effective resolution of the accumulation buffer), would there not potentially be edge artifacts where the lights slightly bleed over the edges? But maybe it wouldn't be very noticeable?

Regarding specular: if you want, you can use specular in exactly the same way as with a Deferred Renderer. You store a material specular value in the normal buffer or depth buffer or even in the specular value of the MSAA'ed depth buffer. This extended specular factor is used to reconstruct specular in the forward rendering (== main) pass, so that we can use a wider range of materials. This way you can implement skin, cloth and other materials better.

Regarding MSAA: I can't say how many artefacts the non-MSAA'ed light buffer will introduce because I didn't implement it on a PC platform with MSAA (I wrote testers in RenderMonkey). I would expect those artefacts to be hard to notice.

This

<<<

or even in the specular value of the MSAA'ed depth buffer.

<<<

should have been

stencil channel

We actually do this in one of our games that uses a Deferred Renderer.

I may have missed it, but I never saw how this problem mentioned by Damian is overcome/doesn't matter:

>>

Two lights:

Channel1 = (a1 * b1) + (a2 * b2)

Channel2 = (b1 + b2)

Want a1 + a2

but ((a1 * b1) + (a2 * b2)) / (b1 + b2) is not a1 + a2.

>>

I did not consider this a problem for several reasons:

1. If you have, let's say, more than 2 overlapping specular values and you see artefacts, you might store your specular power value in the stencil part of the depth buffer or somewhere else. This way you end up with the same solution that Deferred renderers use. Most of the time this is not a problem because you won't see more than 2 overlapping specular highlights.

Let's assume you see more than 2 overlapping specular highlights in your scene: then the mathematical foundation of the specular term is not your biggest problem. Your biggest problem is that your 8-bit channel won't be enough to hold any sensible representation of those three highlights. You will hope that the normal map is detailed enough that no one notices that, for an instant, your specular calculation overflowed your 8-bit channel and was at the same time not mathematically correct. Your best argument in that case is to explain, with a smile on your face, that we are talking about a really rough approximation of a real-life phenomenon that we were not able to represent in any meaningful way anyway. After all, specular is represented the wrong way in all games. So we skillfully added one more way to do it wrong, and we are proud of it because we proved that graphics programmers can fake things cooler than real life :-)

2. If you can live with the fact that you use a high-end graphics card capable of calculating statistical data with 32-bit precision, but are still bound to calculate a term that was developed in 1977 by holding a thumb over one of your eyes long enough that you lose any sense of perspective and color, but see a sufficiently performant approximation of a real-life phenomenon that hardly ever happens, you might also try the other term I mentioned:

(N.H^n * N.L * Att)^nm

Obviously the highlight will change its shape this way, but I promise it won't be any worse than the difference between Blinn-Phong and Phong.
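For the alternative term, here is a C++ sketch that applies a per-material exponent to the accumulated specular channel in the main pass. The exponent is the `nm` above, here a hypothetical `materialPower` parameter. The single-light tests show both effects: the N.H exponent becomes n * nm, and the N.L * Att factor is raised to nm as well. That second effect is where the change in highlight shape comes from.

```cpp
#include <cmath>

// Main pass: raise the accumulated specular channel (N.H^n * N.L * Att, summed over lights)
// to a per-material power; materialPower is a hypothetical name for nm.
inline float materialSpecular(float specChannel, float materialPower) {
    return std::pow(std::fmax(specChannel, 0.0f), materialPower);
}
```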

Ahh, yep. So you just ignore the inaccuracy. Fair enough!

In a nutshell this is right. If you rendered the specular in any other way you would have the same problems, minus the reconstruction problem that this method introduces.

Obviously there is lots of room for more accurate specular models than Blinn-Phong, and if you want you can also implement a specular texture look-up by using the 8-bit channel as an index.

A nice extension of the Light Pre-Pass would be to use a better specular term that can be added and that better represents the real physical nature of specular.

Thinking of a similar system,

so here is my idea for comment.

Pass_Initial {

RenderTarget_01:

R16fG16fB16fA16f =

(RG) ScreenSpaceNormal

(B) Depth

(A) packed(A = 8bit+8bit)

( SpecularGlossiness + DiffuseRoughness )

}

Pass_ShadowMaps X+ {

Render all needed shadowMaps

(optimize using Pass_Initial,

as visible fragments are known?)

(extra "z" or "stencil"-buffer test ?)

}

Pass_LightContributions {

RenderTarget_01:

R16fG16fB16fA16f =

(RGB) combined light diffuse

(A = AntiAliasing Hint ?)

RenderTarget_02:

R16fG16fB16fA16f =

(RGB) combined light specular

(A = Unused ???)

}

Pass_FinalComposition {

RenderTarget_ScreenBuffer:

Forward Rendering of image

}

/Aki R.

Funnily, I came up with the same lighting idea as you describe and have been developing a full-featured renderer for an engine I'm working on using the technique. A while back my ex-colleague mailed me saying "Isn't this the light pre-pass rendering technique?", so I guess there's nothing new under the Sun, eh? (:

I store normal + glossiness factor in the 32-bit "G-buffer" and have two different light buffers, one for diffuse and one for specular. The material shading uses them as input textures, so specular lighting isn't a problem. This seems to work great for the few different light types I have implemented so far (directional, omni, spot & line light).

There are a few screenshots available (http://www.spinxengine.com/index.php?pg=news) and I should release a few more soon now that shadows & SSAO have been implemented as well. The engine is developed as an open source project so you can try it out if you like (requires VS2008 & DX9).

I don't see how this improves over basic deferred shading.

You need a separate full-screen texture for each light source. In basic DS, you need one extra buffer for albedo/material (in addition to color/normal). Then multiple lights can be done without extra buffers. In this approach, you need buffers for every light, so it seems like it would be slower and consume more memory, esp. with lots of lights.

Also, it's not clear how shadows would be incorporated, since you would still need to make multiple screen-space passes after the light pre-passes to include the shadowing term.

Maybe i'm missing something?

<<<

Maybe i'm missing something?

<<<

Yep you are missing the whole idea :-)

Instead of reading four full-screen (maybe MSAA'ed) render targets, you read two for each light. You can pack light properties pretty tightly into the light buffer, so you might get away with an 8:8:8:8 light buffer. I actually do.

So it takes much less memory bandwidth per light and because you do not calculate the whole lighting equation for each light while you render into the light buffer it is also cheaper. So overall you can render much more lights ... probably 2 - 3x. My tests show that you save especially on small lights. You can run very high numbers like 512 or 1024 depending on your platform this way.

Obviously MSAA is easier and material variety is better ... check out the slides for this.

oh and regarding shadows: you treat them in the same way as in a Deferred renderer. So for the directional light shadows you re-construct the world position and then fetch your shadow atlas. For point and spotlights you do the same ... while you render them. So exactly the same as in a Deferred renderer ... nothing special here.
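The bandwidth argument can be put into rough numbers with this sketch. It assumes 32-bit targets, no MSAA, and the target counts from the reply (four for the deferred renderer, two for the Light Pre-Pass). It only counts reads of the input targets. The blend into the destination is left out of the count, and the function name is hypothetical.

```cpp
#include <cstdint>

// Bytes fetched from screen-sized input targets while one light covers `pixels` pixels.
// Assumes 4 bytes per texel and no MSAA; the blend into the destination is not counted.
inline std::uint64_t bytesReadPerLight(std::uint64_t pixels, std::uint64_t inputTargets,
                                       std::uint64_t bytesPerTexel = 4) {
    return pixels * inputTargets * bytesPerTexel;
}
```

For a full-screen light at 1280x720 (921,600 pixels), four targets give 14,745,600 bytes and two give 7,372,800 bytes. Smaller lights cover fewer pixels, so the absolute numbers shrink while the 2:1 ratio stays the same.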

Jason Bracey from jviper2004002@hotmail.com. Is the source/sample demo still available?

Hey!

The light pre-pass renderer is a brilliant idea; it's fast and even has really good quality! I used a light pre-pass renderer in my engine and the results are pretty good.

thx,

Jesse

PS: Sry for my bad english... ;)

Another stupid trick for the edge detection pass on platforms that support sampling the MSAA surface with linear sampling: sample the normal buffer twice, once with POINT sampling and once with LINEAR sampling. Use clip(-abs(L-P)+eps). The linear sampled value should be used to compute the lighting of "non-MSAA" texels in the same shader to avoid an extra pass.

That sounds very cool. What is eps?

Oops, sorry: eps = epsilon.

The idea is to use a small threshold value to bias the texkill test, so that when the multisampled normals are only slightly different we can use the averaged value to perform the lighting at non-MSAA resolution during the first pass, as an optimization.
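A CPU-side C++ sketch of that edge test follows. It assumes a LINEAR fetch from the MSAA surface returns the average of the samples. It also assumes a POINT fetch returns one of them (sample 0 here; which sample is platform-dependent). `needsPerSampleLighting` is a hypothetical name. It returns true exactly when `clip(-abs(L-P)+eps)` would discard the texel, since `clip` on a vector discards if any component is negative.

```cpp
#include <array>
#include <cmath>
#include <vector>

using Normal = std::array<float, 3>;

// True when clip(-abs(L - P) + eps) would discard the texel, i.e. when any component of the
// averaged (LINEAR) normal differs from the single-sample (POINT) normal by more than eps,
// so the texel needs per-sample lighting later. Expects at least one sample.
inline bool needsPerSampleLighting(const std::vector<Normal>& samples, float eps) {
    Normal linear{0.0f, 0.0f, 0.0f};
    for (const Normal& s : samples)
        for (int i = 0; i < 3; ++i)
            linear[i] += s[i] / static_cast<float>(samples.size());
    const Normal& point = samples.front();  // assumed POINT result: sample 0
    for (int i = 0; i < 3; ++i)
        if (std::fabs(linear[i] - point[i]) > eps) return true;
    return false;
}
```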

I was wondering if anyone ever used a trick like the following to access samples values from MSAA surface using DX9 on PC.

For example, with MSAA2X we have two samples, A and B, that we can't read, but can we:

- resolve to a non-MSAA GBuffer (Gleft)

- draw a fullscreen black quad with alpha to coverage enabled and that outputs a constant alpha value (but alpha write disabled) for a 50% coverage so as to mask half the texels (=> half the texels are now black)

- resolve again into another GBuffer (Gright)

Gleft = (A+B) / 2

Gright = (0+B) / 2 (or (A+0)/2)

=>

sample B = 2*Gright

sample A = 2*Gleft - B

The only problem is the downscale pattern used by the resolve operation. On consoles we can choose between different modes (square, rotated...), but I couldn't find any information about this for DX9 on PC :(

If it works, we could render like this:

- render geometry once with MSAA2X, depth buffer test & write, albedo RGB in .rgb + edge in .a (using the centroid trick)

- render geometry again with MSAA2X, depth buffer test only, normal XY in .rg, matID (or packed material params) in .b and depth in .a

- resolve the MSAA buffer into two non-MSAA textures if needed (or resolve "on the fly" during lighting if possible)

- use the centroid edge information to clip texels that need supersampling; otherwise just compute lighting, accumulating albedo and lighting into the non-MSAA buffer

- then compute supersampled lighting for the remaining texels, still accumulating albedo and lighting into the non-MSAA buffer.
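The arithmetic behind the two-resolve idea for MSAA2X can be checked in C++. This sketch makes two assumptions. First, the resolve is a plain average of the two samples; the actual downsample pattern on DX9 PC is exactly the open question in the comment. Second, the alpha-to-coverage quad blacks out sample A. `resolveUnorm8` models an 8-bit resolve target and shows that the recovered A can be off by up to 2/255.

```cpp
#include <cmath>

struct Samples { float a, b; };

// Gleft  = (A + B) / 2  -> plain resolve
// Gright = (0 + B) / 2  -> resolve after the alpha-to-coverage quad blacked out sample A
inline Samples recoverSamples(float gLeft, float gRight) {
    float b = 2.0f * gRight;
    return {2.0f * gLeft - b, b};
}

// Assumed resolve into an 8-bit UNORM target: box average, then quantize to 1/255.
inline float resolveUnorm8(float s0, float s1) {
    return std::round((s0 + s1) * 0.5f * 255.0f) / 255.0f;
}
```

With A = 0.2 and B = 0.6, exact resolves give back both samples. After 8-bit resolves, B comes back as 154/255 and A as 50/255, each about 1/255 off, because the doubling amplifies the rounding error of each resolve.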

Hello, I really like this technique and have started putting something together. I have now stumbled onto a problem with normal maps, or any other mapping which fiddles with the normal vectors.

How do you go about implementing a simple normal mapping technique in the context of the pre-light rendering pipeline?

I am assuming that we don't want to read-in the normal-map during the depth pass.

Best regards, Dan.

It would be super helpful if someone could post screenshot of what MSAA looks like with this technique. The worst case I can think of is something like this:

High-albedo object in a low amount of light, on top of a low-albedo object in a high amount of light.

MSAA with centroid sampling should be pretty good, but there will still be artifacts and I'm interested in how bad they are.

Eric

Hi,

First I want to say that I like the pre-light architecture very much and decided to implement it in OpenGL. Unfortunately, I have problems getting rid of all artifacts when using MSAA.

No MSAA:

0_samples.png

Notice that the white curved line looks pretty bad without MSAA, but the tree (green)/floor (blue) edges are rendered well.

With MSAA (8x):

8_samples_nearest.png

Here the curved line is fine but the tree (green)/floor (blue) edge sampling is quite ugly.

With MSAA (8x) with centroid sampling:

8_samples_centroid_linear.png

I'd say this looks best, but there are still tree (green)/floor (blue) edges which I'd like to get rid of.

Any help/hints to improve the quality are very much appreciated. Maybe I'm simply missing a few OpenGL parameters.

Thanks and best regards,

Michael


Hi, where can I download RendererDesign.zip? The link no longer works.
